Table Of Contents

- Understanding Embeddings in Yelp's Ecosystem

- Text Embeddings: Capturing the Heart of Yelp Reviews

- Business Embeddings: Linking Users and Locations

- Photo Embeddings: Visual Intelligence with CLIP

- Applications and Benefits of Yelp Content Embeddings

- Challenges and Future Directions

- FAQs

- Conclusion

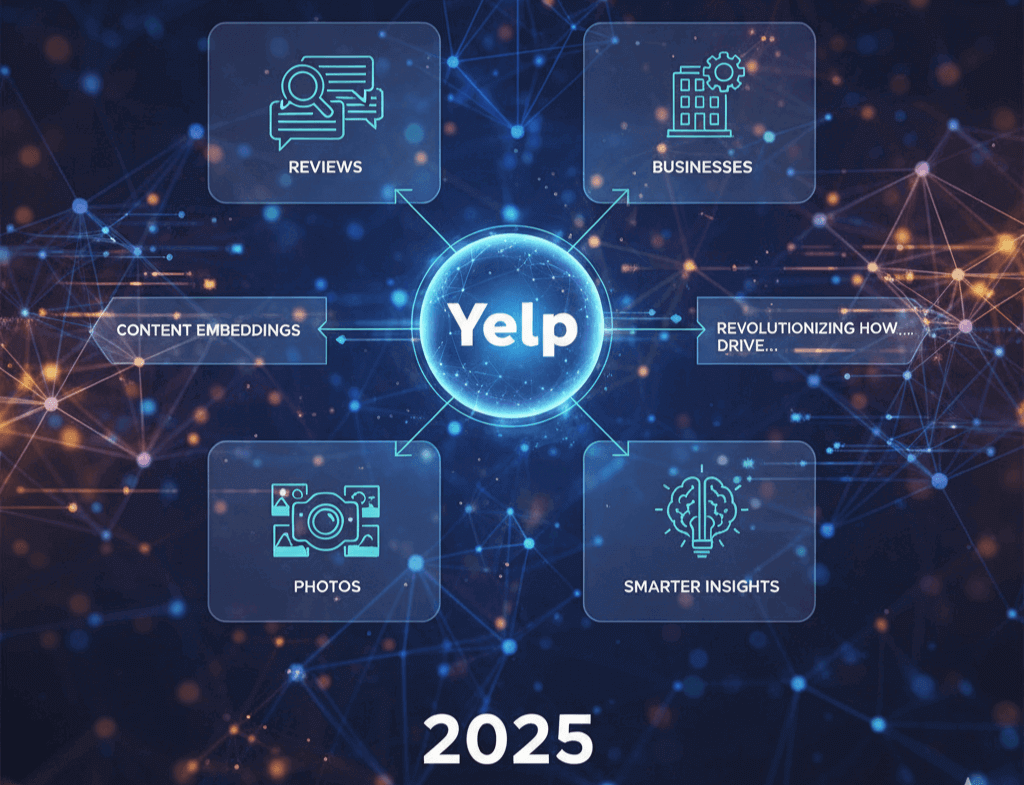

Imagine scrolling through Yelp, hunting for the perfect spot for dinner. Behind the scenes, something magical happens—Yelp content embeddings turn raw reviews, business details, and photos into smart, searchable data that feels almost intuitive. These embeddings aren’t just tech jargon; they’re the secret sauce making your searches faster and more relevant. In this deep dive, we’ll explore how Yelp engineers craft these low-dimensional representations to boost everything from recommendations to content ranking.

Yelp’s approach to embeddings stems from a need to handle massive datasets efficiently. With millions of reviews pouring in, capturing semantic meaning is key. That’s where models like the Universal Sentence Encoder come in, transforming text into vectors that power semantic search over Yelp reviews and beyond. Stick around as we unpack the tech, share real-world examples, and show why this matters for users and businesses alike.

Understanding Embeddings in Yelp's Ecosystem

Embeddings are essentially compact numerical representations of data that capture its essence. In the Yelp world, they turn unstructured content—like a glowing review about a cozy cafe—into vectors machines can understand and compare. This isn’t about simple word counts; it’s about grasping context, sentiment, and relationships.

Take Yelp review embeddings, for instance. They encode the nuances of user feedback, making it easier to spot patterns. Yelp’s engineering work shows that these embeddings improve model development across tasks like tagging and sentiment analysis. Industry trends also point to a surge in embedding usage, with NLP advancements growing 25% annually, according to recent Gartner reports.

Why does this matter? Embeddings cut down on development time for new AI features. For businesses, it means better visibility in searches. Picture a restaurant owner analyzing reviews: embeddings highlight common praises or complaints, guiding improvements.

Text Embeddings: Capturing the Heart of Yelp Reviews

Yelp’s text embeddings start with reviews, the platform’s goldmine of user insights. Engineers chose the Universal Sentence Encoder after testing alternatives. This model, published by Google on TensorFlow Hub, converts variable-length text into fixed 512-dimensional vectors, focusing on meaning over mere words.
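As a rough illustration, the sketch below loads the pre-trained encoder from TensorFlow Hub and turns review texts into plain lists of 512 floats. It assumes the `tensorflow` and `tensorflow_hub` packages and the public handle `https://tfhub.dev/google/universal-sentence-encoder/4`; the helper names `load_use` and `embed_reviews` are hypothetical, not Yelp’s code. The encoder is passed in as a callable so the conversion logic can be tried without downloading the model.

```python
from typing import Callable, List, Sequence

# Public TF Hub handle for the pre-trained Universal Sentence Encoder (v4).
USE_HANDLE = "https://tfhub.dev/google/universal-sentence-encoder/4"
EMBED_DIM = 512  # USE maps any input text to a 512-dimensional vector


def load_use() -> Callable:
    """Download (on first call) and load the encoder; needs tensorflow + tensorflow_hub."""
    import tensorflow_hub as hub  # lazy import so the helper below works without TF

    return hub.load(USE_HANDLE)


def embed_reviews(reviews: Sequence[str], encoder: Callable) -> List[List[float]]:
    """Encode review texts into fixed-size vectors (hypothetical helper)."""
    if not reviews:
        return []
    vectors = encoder(list(reviews))
    # The TF Hub model returns a tensor; convert it to plain Python floats.
    if hasattr(vectors, "numpy"):
        vectors = vectors.numpy()
    result = [[float(x) for x in row] for row in vectors]
    for row in result:
        if len(row) != EMBED_DIM:
            raise ValueError(f"expected {EMBED_DIM} dims, got {len(row)}")
    return result
```

In practice you would call `embed_reviews(["Great tacos, friendly staff."], load_use())` once the model is available.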

The Universal Sentence Encoder shines because it’s trained on diverse tasks like classification and similarity. In Yelp’s case, it handles the eclectic mix of review topics, from food critiques to service rants. A heatmap analysis in their explorations showed that reviews from similar categories (like restaurants) cluster closely in vector space, evidence of semantic relatedness.
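A heatmap like the one described can be built from a category-by-category matrix of mean cosine similarities. The sketch below is one plausible way to compute it, assuming you already have review vectors grouped by category; the function names and toy inputs are hypothetical. Each cell would become one heatmap square (for example via a plotting library’s image function).

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def category_similarity(groups: Dict[str, List[Vector]]) -> Dict[str, Dict[str, float]]:
    """Mean pairwise cosine similarity between reviews of each pair of categories.

    On the diagonal a review is never compared with itself; a category with a
    single review therefore gets NaN on its diagonal cell.
    """
    names = sorted(groups)
    matrix: Dict[str, Dict[str, float]] = {}
    for a in names:
        matrix[a] = {}
        for b in names:
            sims = [
                cosine(u, v)
                for i, u in enumerate(groups[a])
                for j, v in enumerate(groups[b])
                if not (a == b and i == j)
            ]
            matrix[a][b] = sum(sims) / len(sims) if sims else float("nan")
    return matrix
```

If categories really are semantically coherent, diagonal cells should be noticeably higher than off-diagonal ones.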

But Yelp didn’t stop at an off-the-shelf model. They experimented with encoders fine-tuned for domain-specific NLP, using tasks like review category prediction. Surprisingly, the pre-trained model edged out the custom one, likely because Yelp’s broad content mirrors general language patterns.
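The article doesn’t spell out how the encoders were compared. One common, lightweight approach is to train a simple classifier on each encoder’s frozen vectors for the same task (here, review category prediction) and compare held-out accuracy. The sketch below assumes that setup and uses a nearest-centroid classifier as a stand-in; it is not Yelp’s documented method, and all names are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Labeled = Sequence[Tuple[Vector, str]]


def _normalize(v: Sequence[float]) -> Vector:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else list(v)


def fit_centroids(train: Labeled) -> Dict[str, Vector]:
    """Average the unit-normalized embeddings of each category."""
    sums: Dict[str, Vector] = {}
    counts: Dict[str, int] = defaultdict(int)
    for vec, label in train:
        v = _normalize(vec)
        if label not in sums:
            sums[label] = [0.0] * len(v)
        sums[label] = [s + x for s, x in zip(sums[label], v)]
        counts[label] += 1
    return {lab: [s / counts[lab] for s in total] for lab, total in sums.items()}


def predict(centroids: Dict[str, Vector], vec: Vector) -> str:
    """Pick the category whose centroid has the highest cosine similarity."""
    v = _normalize(vec)

    def score(label: str) -> float:
        c = _normalize(centroids[label])
        return sum(a * b for a, b in zip(v, c))

    return max(centroids, key=score)


def accuracy(train: Labeled, test: Labeled) -> float:
    """Held-out accuracy of the probe for one encoder's vectors."""
    centroids = fit_centroids(train)
    hits = sum(predict(centroids, vec) == label for vec, label in test)
    return hits / len(test)
```

Running `accuracy` once on pre-trained vectors and once on fine-tuned vectors for the same reviews gives a like-for-like comparison.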

Tip for platforms like Yelp: start with versatile models to avoid over-customization pitfalls. In practice, these embeddings enhance the content ranking algorithms Yelp uses, prioritizing helpful reviews in search results.

Business Embeddings: Linking Users and Locations

Moving beyond text, Yelp creates business embeddings by aggregating vectors from user content. The method? Average the embeddings of the 50 most recent reviews for a business. This creates a holistic representation, ripe for recommendations.
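The averaging step is simple enough to sketch directly. The example below assumes each review carries a posting date and a precomputed embedding; the `Review` dataclass and `business_embedding` helper are hypothetical names, and the plain element-wise mean follows the description above.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Sequence

Vector = List[float]


@dataclass
class Review:
    created: date      # when the review was posted
    embedding: Vector  # e.g. a 512-d Universal Sentence Encoder vector


def business_embedding(reviews: Sequence[Review], k: int = 50) -> Vector:
    """Element-wise mean of the embeddings of the k most recent reviews."""
    if not reviews:
        raise ValueError("business has no reviews to aggregate")
    recent = sorted(reviews, key=lambda r: r.created, reverse=True)[:k]
    dim = len(recent[0].embedding)
    total = [0.0] * dim
    for r in recent:
        total = [t + x for t, x in zip(total, r.embedding)]
    return [t / len(recent) for t in total]
```

Restricting to recent reviews keeps the vector in step with how the business is experienced today.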

Think about it: A coffee shop’s embedding might blend notes on ambiance, brew quality, and service. By comparing these vectors, Yelp generates “Users like you also liked…” suggestions. Cosine similarity drives this, measuring how close embeddings are in space.
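Cosine similarity is cos(a, b) = (a · b) / (‖a‖ ‖b‖): 1 for vectors pointing the same way, 0 for unrelated ones. A minimal sketch of “similar businesses” retrieval, assuming business embeddings are already stored in a dictionary keyed by a hypothetical business ID, might look like this (a brute-force scan; large catalogs usually use an approximate nearest-neighbor index instead).

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Dot product divided by the product of the vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def similar_businesses(
    target: str, embeddings: Dict[str, Vector], k: int = 5
) -> List[Tuple[str, float]]:
    """Rank every other business by cosine similarity to `target`."""
    query = embeddings[target]
    scored = [
        (bid, cosine(query, vec)) for bid, vec in embeddings.items() if bid != target
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```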

In recommendation systems, this kind of approach has been reported to boost click-through rates by 15-20% in similar e-commerce implementations. For Yelp, it extends to user-business matching, drawing from interaction histories. Trends show embeddings outperforming traditional metadata in personalization, as seen in Netflix’s vector-based systems.

Practical example: A user who frequents Italian spots gets nudged toward a new trattoria with similar embedding profiles. This not only retains users but helps businesses gain traction.
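One simple way to turn interaction histories into recommendations, sketched here as an assumption rather than Yelp’s actual pipeline, is to average the embeddings of the businesses a user has visited into a profile vector, then rank unvisited businesses by cosine similarity to that profile. Business IDs and vectors below are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def _cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def user_profile(history: Sequence[str], businesses: Dict[str, Vector]) -> Vector:
    """Mean embedding of the businesses in the user's history."""
    vecs = [businesses[b] for b in history]
    if not vecs:
        raise ValueError("empty history")
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def recommend(
    history: Sequence[str], businesses: Dict[str, Vector], k: int = 3
) -> List[str]:
    """Unvisited businesses closest to the user's profile vector."""
    profile = user_profile(history, businesses)
    seen = set(history)
    candidates = [b for b in businesses if b not in seen]
    candidates.sort(key=lambda b: _cosine(profile, businesses[b]), reverse=True)
    return candidates[:k]
```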

Photo Embeddings: Visual Intelligence with CLIP

Photos add another layer: Yelp’s visual content is vast, and embedding it unlocks new possibilities. Enter CLIP, OpenAI’s image-text model, which Yelp applies to its photos. CLIP pairs images with text, learning to align visuals with descriptions via contrastive learning.
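To make “contrastive learning” concrete, here is a toy, pure-Python version of the symmetric objective CLIP is trained with: for a batch of N matched image/text pairs, build the N×N matrix of cosine similarities divided by a temperature, then apply cross-entropy over rows (image to text) and columns (text to image), with the diagonal as the correct match. The fixed temperature of 0.07 is an assumption for illustration (CLIP learns this value); this is not OpenAI’s implementation.

```python
import math
from typing import List

Vector = List[float]


def _normalize(v: Vector) -> Vector:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def _cross_entropy(logits: List[float], target: int) -> float:
    """-log softmax(logits)[target], computed stably via log-sum-exp."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def clip_contrastive_loss(
    images: List[Vector], texts: List[Vector], temperature: float = 0.07
) -> float:
    """Symmetric InfoNCE loss: image i and text i form the positive pair."""
    img = [_normalize(v) for v in images]
    txt = [_normalize(v) for v in texts]
    n = len(img)
    # logits[i][j] = cosine(image i, text j) / temperature
    logits = [
        [sum(a * b for a, b in zip(img[i], txt[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    image_to_text = sum(_cross_entropy(logits[i], i) for i in range(n)) / n
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    text_to_image = sum(_cross_entropy(columns[j], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2
```

Training pushes this loss down, which pulls each image toward its own caption and away from the other captions in the batch.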

Yelp tested CLIP against ResNet50 classifiers for categories like food or interiors. Results? Zero-shot CLIP often matched or beat trained models, especially after label engineering—like prefixing “A photo of” to classes. For a 5-way restaurant classifier, CLIP hit 96% precision on drinks, showcasing its zero-shot prowess.
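Zero-shot classification with label engineering can be sketched as follows: wrap each class name in a prompt such as “A photo of {}.”, embed the prompts with CLIP’s text encoder and the photo with its vision encoder, then take a softmax over scaled cosine similarities. The sketch assumes those embeddings are already computed; the helper names, example labels, and the scale of 100 (roughly the learned value in OpenAI’s released CLIP models) are assumptions.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def build_prompts(labels: Sequence[str], template: str = "A photo of {}.") -> List[str]:
    """Label engineering: wrap each raw class name in a natural-language prompt."""
    return [template.format(label) for label in labels]


def _unit(v: Sequence[float]) -> Vector:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def zero_shot_probs(
    image_vec: Vector,
    prompt_vecs: Sequence[Vector],
    labels: Sequence[str],
    logit_scale: float = 100.0,
) -> Dict[str, float]:
    """Softmax over scaled cosine similarities between one photo and each prompt."""
    img = _unit(image_vec)
    logits = [logit_scale * sum(a * b for a, b in zip(img, _unit(p))) for p in prompt_vecs]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return {label: e / total for label, e in zip(labels, exps)}
```

No classifier is trained: changing the label set only means building and embedding new prompts.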

At Yelp, photo embedding generation involves feeding images through CLIP’s vision encoder, producing vectors for tasks like tagging. Vulnerabilities exist, though: typographic attacks, where text in an image (like a menu) skews the classification. Yelp counters this with thresholds and careful engineering.
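The sketch below shows one way to produce photo embeddings with CLIP’s vision encoder through Hugging Face `transformers` (API as of the 4.x releases; requires `torch`, `transformers`, and Pillow), plus a simple confidence threshold that abstains instead of forcing a label. The checkpoint `openai/clip-vit-base-patch32`, the helper names, and the 0.8 threshold are assumptions, not Yelp’s settings.

```python
from typing import Dict, List, Optional

CLIP_CHECKPOINT = "openai/clip-vit-base-patch32"  # public Hugging Face checkpoint


def embed_photos(paths: List[str]) -> List[List[float]]:
    """Run photos through CLIP's vision encoder and return unit-length vectors."""
    import torch  # lazy imports: only needed when actually embedding photos
    from PIL import Image
    from transformers import CLIPModel, CLIPProcessor

    model = CLIPModel.from_pretrained(CLIP_CHECKPOINT)
    processor = CLIPProcessor.from_pretrained(CLIP_CHECKPOINT)
    images = [Image.open(p).convert("RGB") for p in paths]
    inputs = processor(images=images, return_tensors="pt")
    with torch.no_grad():
        features = model.get_image_features(**inputs)
    # L2-normalize so dot products equal cosine similarities
    features = features / features.norm(dim=-1, keepdim=True)
    return features.tolist()


def label_or_abstain(probs: Dict[str, float], threshold: float = 0.8) -> Optional[str]:
    """Return the top label only if its probability clears the threshold."""
    label, p = max(probs.items(), key=lambda kv: kv[1])
    return label if p >= threshold else None
```

Abstaining on low-confidence photos limits the damage from misleading inputs, such as a menu whose printed text pulls the prediction toward the wrong class.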

Comparing CLIP with ResNet on photos reveals CLIP’s edge in handling unseen data, ideal for Yelp’s ever-growing photo library. Trends in multimodal AI predict a 30% rise in adoption by 2025, per Forrester.
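A fair comparison scores both models on the same labeled held-out photos. Per-class precision, the metric behind a figure like “96% on drinks”, is the share of photos predicted as a class that truly belong to it. A minimal sketch (function name hypothetical), run once with each model’s predictions:

```python
from collections import Counter
from typing import Dict, Sequence


def per_class_precision(predicted: Sequence[str], actual: Sequence[str]) -> Dict[str, float]:
    """Precision per predicted class: correct predictions / all predictions of that class."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    predicted_counts = Counter(predicted)
    correct = Counter(p for p, a in zip(predicted, actual) if p == a)
    return {label: correct[label] / n for label, n in predicted_counts.items()}
```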

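Real-world scenario: a photo of a burger gets embedded and matched to reviews praising “juicy patties”, which requires the photo and the review text to share an embedding space, as CLIP’s image and text encoders do. This enriches search, letting users find spots via visual cues.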

Applications and Benefits of Yelp Content Embeddings

Yelp content embeddings fuel a range of tasks. Semantic search over Yelp reviews becomes more accurate, because embeddings enable querying by meaning rather than keywords. For instance, a search for “cozy date spot” pulls up reviews whose embeddings sit close to romantic, intimate descriptions.
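A minimal semantic-search sketch: embed the query with the same encoder used for the reviews (for example the Universal Sentence Encoder), then return the reviews with the highest cosine similarity. The `encode` callable and function names are assumptions; mixing encoders between query and reviews would make the scores meaningless.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def _cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def semantic_search(
    query: str,
    reviews: Sequence[str],
    review_vecs: Sequence[Vector],
    encode: Callable[[List[str]], List[Vector]],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Return the k reviews whose embeddings are closest to the query's."""
    query_vec = encode([query])[0]
    scored = [(text, _cosine(query_vec, vec)) for text, vec in zip(reviews, review_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```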

Benefits of using vector embeddings for reviews include faster prototyping of ML models. They serve as baselines, reducing the need for custom features. In ranking, embeddings assess review relevance, boosting diverse, informative content to the top.

Business recommendations improve with content embeddings by clustering similar entities. Tips: Use cosine similarity for top-k lists, ensuring diversity to avoid echo chambers.
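One well-known way to build top-k lists that are both relevant and diverse is Maximal Marginal Relevance (MMR), swapped in here as an illustration rather than Yelp’s documented method. Each step picks the candidate maximizing λ·sim(query, d) − (1 − λ)·max sim(d, already picked), so near-duplicates of earlier picks are penalized. Names and λ values below are assumptions.

```python
import math
from typing import Dict, List

Vector = List[float]


def _cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def mmr(query: Vector, candidates: Dict[str, Vector], k: int = 3, lam: float = 0.7) -> List[str]:
    """Greedy Maximal Marginal Relevance: trade relevance against redundancy."""
    selected: List[str] = []
    remaining = dict(candidates)
    while remaining and len(selected) < k:

        def score(cid: str) -> float:
            relevance = _cosine(query, remaining[cid])
            redundancy = max(
                (_cosine(remaining[cid], candidates[s]) for s in selected), default=0.0
            )
            return lam * relevance - (1 - lam) * redundancy

        best = max(remaining, key=score)
        selected.append(best)
        del remaining[best]
    return selected
```

With `lam=1.0` this reduces to plain cosine top-k; lowering it spreads the list across more distinct options.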

Evaluating semantic relatedness in review embeddings at Yelp involved cosine heatmaps, confirming category correlations. This validates embeddings for clustering and anomaly detection.
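For anomaly detection, one simple sketch (an assumption, not a documented Yelp pipeline) is to flag reviews whose embedding points away from the centroid of a business’s reviews, which can surface off-topic or spam content for human review. The 0.5 threshold and names are hypothetical.

```python
import math
from typing import Dict, List

Vector = List[float]


def _cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def flag_outliers(review_vecs: Dict[str, Vector], threshold: float = 0.5) -> List[str]:
    """IDs of reviews whose cosine similarity to the centroid is below threshold."""
    n = len(review_vecs)
    centroid = [sum(col) / n for col in zip(*review_vecs.values())]
    return [rid for rid, v in review_vecs.items() if _cosine(v, centroid) < threshold]
```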

Challenges and Future Directions

No tech is perfect. Fine-tuning didn’t yield gains for text, highlighting the power of general models. For photos, CLIP’s foreground bias leads it to misclassify interior shots that contain people. Solutions? Hybrid approaches blending multiple models.

Looking ahead, Yelp plans to incorporate photos into business embeddings and fine-tune CLIP on their domain. This could enhance accuracy, especially for niche categories.
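How photos will be folded into business embeddings isn’t specified. A purely hypothetical sketch: average the review vectors and the photo vectors separately, normalize each, and concatenate them with a weight. Concatenation rather than averaging is used because USE text vectors and CLIP photo vectors live in different spaces. The weighting scheme and names are assumptions.

```python
import math
from typing import List, Sequence

Vector = List[float]


def _mean(vecs: Sequence[Vector]) -> Vector:
    if not vecs:
        raise ValueError("need at least one vector")
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def _unit(v: Vector) -> Vector:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else v


def multimodal_business_embedding(
    review_vecs: Sequence[Vector], photo_vecs: Sequence[Vector], photo_weight: float = 0.5
) -> Vector:
    """Concatenate the weighted, normalized mean review and mean photo vectors.

    Text (USE) and photo (CLIP) vectors come from different models, so they
    are concatenated side by side rather than averaged together.
    """
    if not 0.0 <= photo_weight <= 1.0:
        raise ValueError("photo_weight must be in [0, 1]")
    text_part = [(1 - photo_weight) * x for x in _unit(_mean(review_vecs))]
    photo_part = [photo_weight * x for x in _unit(_mean(photo_vecs))]
    return text_part + photo_part
```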

Industry patterns show embeddings evolving with transformers, promising even richer representations. For review platforms, implementing domain-specific embedding models for review analysis is key—start small, scale with data.

- NLP solutions for review embedding generation in local platforms: Adopt USE or similar for scalable, semantic vectors.

- Implementing domain-specific embedding models for review analysis: Start with pre-trained, fine-tune if needed, integrate into pipelines.

- Tools for enhanced semantic search in UGC platforms: Leverage TensorFlow Hub for USE, Hugging Face for CLIP.

- AI-powered review ranking with vector embeddings: Use embeddings to score relevance and diversity.

FAQs

What are embeddings in the context of Yelp content?

Embeddings are vector representations that distill reviews, businesses, and photos into numerical forms, capturing semantic info for ML tasks.

What models are used for generating Yelp embeddings?

Key ones include Universal Sentence Encoder for text and CLIP for photos, with averages for businesses.

What tasks do Yelp’s content embeddings improve?

Tagging, extraction, ranking, and recommendations across text, business, and visual data.

How does Yelp generate embeddings for reviews, photos, and businesses?

Reviews use USE; photos via CLIP; businesses by averaging review vectors.

How does the Universal Sentence Encoder enhance Yelp data analysis?

It provides context-aware vectors, improving similarity measures and transfer learning.

Can embeddings improve semantic search across Yelp content?

Absolutely: they allow meaning-based queries, enhancing user experience.

Should businesses use content embeddings for review analysis?

Yes, for spotting trends and improving services without manual sifting.

Are photo embeddings as accurate as text embeddings for recommendations?

They’re comparable, with CLIP rivaling specialized classifiers in tests.

Conclusion

Yelp content embeddings are more than a backend upgrade; they’re transforming how we discover local businesses. From the Universal Sentence Encoder powering text to CLIP turning photos into insights, these tools make data smarter. As Yelp expands its embedding database, expect even better searches and recommendations.

If you’re in tech or business, consider how embeddings could elevate your platform. Dive into Yelp’s blog for more, or experiment with open-source models. The future of content is vectorized. Join the ride.