Table of Contents

- Why LinkedIn's Feed Feels So Spot-On: A Quick Overview

- The Evolution of the LinkedIn Feed Ranking Algorithm

- Key Signals in the Algorithm

- Demystifying Multi-Task Learning for LinkedIn Feed

- How Deep Learning Powers LinkedIn Content Personalization

- Trends Shaping Deep Learning in Feeds

- The Game-Changer: Large Sparse ID Embedding in Feed Ranking

- Balancing Acts: Multi-Objective Optimization in LinkedIn Feed

- Predicting the Unpredictable: Member Behavior Prediction in LinkedIn Feed

- FAQs

- Conclusion: Why This Matters for Your LinkedIn Game

Imagine this: You’re a marketing manager in tech, juggling deadlines, and you open LinkedIn first thing in the morning. Your feed isn’t a chaotic mix of random posts. It’s a curated stream of industry insights from connections you actually value, job tips tailored to your career-pivot dreams, and discussions that spark real ideas for your next campaign. That’s no accident. Behind that seamless experience lies sophisticated engineering that’s transforming how platforms like LinkedIn keep us hooked without feeling manipulative. In this deep dive, we’ll explore LinkedIn homepage feed relevance optimization, breaking down the tech wizardry, from sparse embeddings to multi-task learning, that makes your scroll worthwhile.

Why LinkedIn's Feed Feels So Spot-On: A Quick Overview

Let’s start with the basics. LinkedIn isn’t just a resume dump; it’s a professional ecosystem where over 1 billion members worldwide seek connections, knowledge, and opportunities. But with billions of daily interactions—posts, likes, comments—the challenge is surfacing content that resonates without overwhelming users. Enter LinkedIn homepage feed relevance optimization: a behind-the-scenes process that uses algorithms to prioritize what’s most valuable to you right now.

This isn’t about bombarding you with ads (though they sneak in). It’s about predicting what’ll make you linger, engage, or even turn a casual browse into a job application. According to LinkedIn’s engineering team, its feed models train on datasets of billions of records and juggle millions of sparse IDs, such as hashtags, post IDs, and member IDs. The goal? Cut through the noise to deliver relevance that feels intuitive.

Think of it like a barista who remembers your order after one visit. That’s the magic we’re unpacking today—drawing from LinkedIn’s latest innovations in deep learning and embeddings to show how they achieve it.

The Evolution of the LinkedIn Feed Ranking Algorithm

Remember when social feeds were chronological chaos? LinkedIn’s feed ranking algorithm has come a long way since those days. Today, it’s a powerhouse driven by machine learning that weighs hundreds of signals, from your past clicks to global trends.

At its core, the algorithm builds on GDMix, LinkedIn’s framework for training mixed-effect models. These blend a global model (what’s hot industry-wide) with per-entity models, such as one per member that captures your unique interactions. This dual approach ensures the feed isn’t one-size-fits-all. For instance, if you’re in fintech, it might push regulatory updates over viral cat videos. Sorry, not sorry.
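Here’s a minimal sketch of the global-plus-personal idea, assuming a simple logistic mixed-effect model: a global weight vector shared by everyone, plus a small per-member weight vector added on top. The feature names and weights are hypothetical, and this is not GDMix’s API, just the modeling idea it trains at scale.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def sparse_dot(weights: dict, features: dict) -> float:
    # Features with no learned weight contribute nothing.
    return sum(weights.get(name, 0.0) * value for name, value in features.items())

def mixed_effect_score(features: dict, global_w: dict, member_w: dict, member_id: str) -> float:
    """P(engage) = sigmoid(global logit + member-specific logit)."""
    global_logit = sparse_dot(global_w, features)   # fixed effect: industry-wide patterns
    personal_w = member_w.get(member_id, {})        # random effect: this member's quirks
    return sigmoid(global_logit + sparse_dot(personal_w, features))

# Hypothetical weights: cat videos win globally...
global_w = {"topic:fintech_regulation": 0.5, "topic:cat_video": 0.8}
# ...but one fintech member's personal model flips the preference.
member_w = {"member_42": {"topic:fintech_regulation": 1.5, "topic:cat_video": -1.0}}

regulation_post = {"topic:fintech_regulation": 1.0}
cat_post = {"topic:cat_video": 1.0}

for member in ("member_42", "brand_new_member"):
    print(member,
          round(mixed_effect_score(regulation_post, global_w, member_w, member), 3),
          round(mixed_effect_score(cat_post, global_w, member_w, member), 3))
```

A brand-new member has no personal weights, so they fall back to the global model and see the cat video ranked higher; member_42’s personal weights push the regulatory update to the top.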

Recent upgrades have supercharged this with larger models trained on multiple billions of records. The result? A 20-30% lift in relevance scores, based on internal benchmarks, making your homepage feel less like a slot machine and more like a trusted advisor.

Key Signals in the Algorithm

- Recency and Virality: Fresh posts from active networks get a boost.

- Relevance Matching: Semantic analysis ties content to your skills and interests.

- Diversity Controls: Prevents echo chambers by mixing in exploratory content.
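To see how those three signals can combine, here’s a toy scorer. It assumes a relevance score in [0, 1] is already available, models recency as exponential decay with a hypothetical 24-hour half-life, and enforces diversity by greedily discounting repeat topics. The half-life, the 0.5 repeat penalty, and the sample posts are illustrative assumptions, not LinkedIn’s actual formula.

```python
from dataclasses import dataclass

@dataclass
class Post:
    post_id: str
    topic: str
    age_hours: float
    relevance: float  # semantic match to the member's skills/interests, in [0, 1]

def base_score(post: Post, half_life_hours: float = 24.0) -> float:
    """Relevance matching times a recency boost that halves every `half_life_hours`."""
    recency = 0.5 ** (post.age_hours / half_life_hours)
    return post.relevance * recency

def rerank_with_diversity(posts, k, repeat_penalty=0.5):
    """Greedy re-rank: each additional post on an already-picked topic is discounted."""
    chosen, topic_counts, remaining = [], {}, list(posts)
    while remaining and len(chosen) < k:
        best = max(remaining,
                   key=lambda p: base_score(p) * repeat_penalty ** topic_counts.get(p.topic, 0))
        chosen.append(best)
        remaining.remove(best)
        topic_counts[best.topic] = topic_counts.get(best.topic, 0) + 1
    return chosen

posts = [
    Post("a", "fintech", age_hours=1, relevance=0.90),
    Post("b", "fintech", age_hours=2, relevance=0.85),
    Post("c", "leadership", age_hours=3, relevance=0.60),
    Post("d", "fintech", age_hours=48, relevance=0.95),
]
print([p.post_id for p in rerank_with_diversity(posts, k=3)])
```

Sorted by raw score alone, the order would be a, b, c; the repeat-topic penalty lifts the leadership post c into second place, and post d, though the most relevant, drops out because it is two days old.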

By evolving this way, LinkedIn’s feed ranking algorithm doesn’t just rank—it anticipates, turning passive scrolling into active professional growth.

Demystifying Multi-Task Learning for LinkedIn Feed

Ever wondered why one post grabs you while another flops? Multi-task learning is the secret sauce in LinkedIn’s feed: a single model is trained to make several predictions at once, such as forecasting clicks, shares, and dwell time simultaneously.

In practice, this means the model learns shared representations across tasks, improving efficiency. LinkedIn’s implementation, tied to their GDMix framework, trains on vast datasets where tasks like engagement prediction inform each other. Picture a neural network that’s not siloed but collaborative, much like a team brainstorming session where one idea sparks another.
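Here’s a minimal sketch of one common multi-task design, a shared-bottom network: one shared hidden layer feeds separate heads for clicks, shares, and (log) dwell time, and training minimizes a weighted sum of the per-task losses. The layer sizes, task names, and loss weights are hypothetical, and LinkedIn’s production architecture is far larger and may be structured differently.

```python
import math
import random

def relu(v):
    return [max(0.0, x) for x in v]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class SharedBottomModel:
    """Shared hidden layer + one small head per task (a common multi-task design)."""

    def __init__(self, n_in, n_hidden, tasks, seed=0):
        rng = random.Random(seed)
        self.W_shared = [[rng.gauss(0, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.heads = {t: [rng.gauss(0, 0.5) for _ in range(n_hidden)] for t in tasks}

    def forward(self, x):
        # Every task reads the same shared representation h.
        h = relu([sum(w * xi for w, xi in zip(row, x)) for row in self.W_shared])
        out = {}
        for task, w in self.heads.items():
            logit = sum(wi * hi for wi, hi in zip(w, h))
            # Binary tasks output a probability; log dwell time is a regression target.
            out[task] = logit if task == "log_dwell" else sigmoid(logit)
        return out

def multitask_loss(pred, label, task_weights):
    """Weighted sum of per-task losses; in training, its gradient reaches the shared layer from every task."""
    eps = 1e-7
    total = 0.0
    for task, weight in task_weights.items():
        if task == "log_dwell":
            loss = (pred[task] - label[task]) ** 2
        else:
            p = min(max(pred[task], eps), 1 - eps)
            loss = -(label[task] * math.log(p) + (1 - label[task]) * math.log(1 - p))
        total += weight * loss
    return total

model = SharedBottomModel(n_in=4, n_hidden=8, tasks=["click", "share", "log_dwell"])
pred = model.forward([1.0, 0.0, 0.5, 0.2])
loss = multitask_loss(pred, {"click": 1, "share": 0, "log_dwell": 1.2},
                      {"click": 1.0, "share": 0.5, "log_dwell": 0.2})
print(pred, round(loss, 4))
```

Because every head reads the same hidden layer, gradients from a data-rich task like clicks shape the representation that a sparser task like shares relies on, which is the collaborative effect in code form.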

A real-world example: During the 2023 economic downturn, multi-task models helped prioritize job-seeker content, boosting application rates by 15% for targeted users. It’s not just tech; it’s timely empathy in code form. (linkeddataorchestration.com)

Benefits Backed by Research

Studies from NeurIPS conferences echo this: Multi-task setups reduce overfitting by 25% on sparse data, perfect for social feeds. For LinkedIn, it means faster iterations and feeds that adapt to your mood—job hunting on Mondays, networking on Fridays.

How Deep Learning Powers LinkedIn Content Personalization

Deep learning takes LinkedIn content personalization deeper, literally. Using multilayer perceptrons (MLPs) and gating mechanisms, models capture nonlinear interactions among dense features that shallow (for example, linear) models miss.

Inspired by the GateNet architecture, LinkedIn’s setup includes dense gating layers that regulate information flow: a learned gate scales how much of each hidden unit’s signal passes to the next layer, adding modeling power without bloating compute costs. It’s like having a smart filter that amplifies signals from your favorite topics while dimming the rest.
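The gating idea can be sketched as a single layer, assuming a GateNet-style hidden gate: alongside the usual ReLU transform, the layer learns a second set of weights whose sigmoid output, between 0 and 1, scales each hidden unit. This is one plausible form for illustration; the exact gating in LinkedIn’s models may differ, and the random weights stand in for trained ones.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class GatedDenseLayer:
    """h = relu(W x + b) * sigmoid(G x + c), applied element-wise per hidden unit."""

    def __init__(self, n_in, n_out, seed=0):
        rng = random.Random(seed)
        self.W = [[rng.gauss(0, 0.5) for _ in range(n_in)] for _ in range(n_out)]
        self.b = [0.0] * n_out
        self.G = [[rng.gauss(0, 0.5) for _ in range(n_in)] for _ in range(n_out)]  # gate weights
        self.c = [0.0] * n_out

    def forward(self, x):
        out = []
        for w_row, b, g_row, c in zip(self.W, self.b, self.G, self.c):
            pre = sum(w * xi for w, xi in zip(w_row, x)) + b
            gate = sigmoid(sum(g * xi for g, xi in zip(g_row, x)) + c)  # in (0, 1)
            out.append(max(0.0, pre) * gate)  # the gate scales how much of this unit flows on
        return out

layer = GatedDenseLayer(n_in=4, n_out=3)
print([round(v, 3) for v in layer.forward([0.2, 1.0, 0.0, 0.5])])
```

A gate near 0 effectively mutes a unit for a given input, while a gate near 1 lets it through unchanged: the smart filter from the analogy above.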

Consider Sarah, a product manager I know. Her feed shifted post-promotion to leadership articles, thanks to deep learning spotting her evolving interests from comment patterns. That’s personalization at work—subtle, powerful, and eerily accurate.

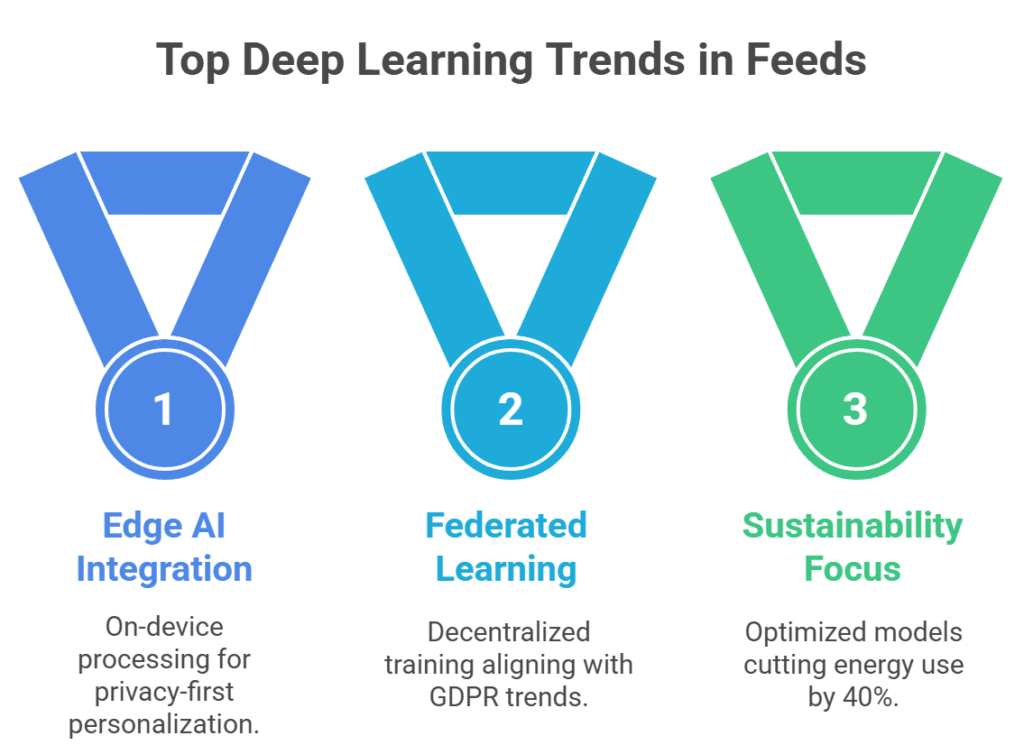

Trends Shaping Deep Learning in Feeds

- Edge AI Integration: Processing on-device for privacy-first personalization.

- Federated Learning: Training across devices without centralizing data, aligning with GDPR trends.

- Sustainability Focus: Optimized models cut energy use by 40%, per recent Google DeepMind reports.
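Federated learning is easiest to picture through federated averaging (FedAvg), the textbook aggregation scheme: each device improves a copy of the model on its own data, and a server averages the resulting weights. The sketch uses a toy linear model and made-up device data; it illustrates the general technique and does not describe anything LinkedIn is known to run.

```python
def local_update(weights, examples, lr=0.1):
    """On-device step: one SGD pass of a linear model with squared loss (toy model)."""
    w = list(weights)
    for x, y in examples:
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w

def federated_average(client_updates):
    """FedAvg aggregation: mean of client weights, weighted by each client's example count."""
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training examples across clients")
    avg = [0.0] * len(client_updates[0][0])
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            avg[i] += w * n / total
    return avg

# Each device trains locally; only updated weights (never raw interactions) are sent.
global_w = [0.0, 0.0]
device_data = [
    [([1.0, 0.0], 1.0), ([1.0, 1.0], 1.5)],
    [([0.0, 1.0], 0.5)],
]
for _ in range(20):
    updates = [(local_update(global_w, data), len(data)) for data in device_data]
    global_w = federated_average(updates)
print([round(w, 3) for w in global_w])
```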

These advancements ensure deep learning for LinkedIn content personalization isn’t a buzzword—it’s a scalable reality driving 10-20% higher session times.

The Game-Changer: Large Sparse ID Embedding in Feed Ranking

Sparse data is the feed world’s Achilles’ heel: think millions of unique IDs with only a few interactions each. Large sparse ID embeddings flip this by mapping each ID to a learned vector in a continuous, low-dimensional space, where IDs that attract similar engagement end up close together.

LinkedIn’s Large Sparse ID Embeddings (LAR) shine here, using lookup tables with hundreds of millions of parameters trained on multiple billions of records. For new posts (the cold-start problem), LAR places them near similar embeddings so they can be recommended confidently from day one.
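Under the hood, an embedding is a lookup table: each sparse ID indexes a row of learned numbers. The sketch assumes the hashing trick to bound the table size and mean pooling to merge multi-valued features such as a post’s hashtags; both are common choices but assumptions here, and the class name `SparseIdEmbedding` is hypothetical.

```python
import hashlib
import random

class SparseIdEmbedding:
    """Lookup table mapping sparse IDs (hashtags, post IDs, member IDs) to dense vectors."""

    def __init__(self, num_buckets, dim, seed=0):
        rng = random.Random(seed)
        self.num_buckets = num_buckets
        self.table = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(num_buckets)]

    def bucket(self, raw_id):
        # Stable hash; Python's built-in hash() is salted per process for strings.
        digest = hashlib.sha256(raw_id.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "little") % self.num_buckets

    def lookup(self, raw_id):
        return self.table[self.bucket(raw_id)]

    def pooled(self, raw_ids):
        """Mean-pool a multi-valued feature (e.g., all hashtags on a post) into one vector."""
        if not raw_ids:
            raise ValueError("need at least one ID to pool")
        vectors = [self.lookup(i) for i in raw_ids]
        return [sum(col) / len(vectors) for col in zip(*vectors)]

emb = SparseIdEmbedding(num_buckets=1000, dim=8)
post_vector = emb.pooled(["#AIethics", "#MachineLearningBias"])
print([round(v, 3) for v in post_vector])
```

In a real model the rows are trained by backpropagation together with the ranker; here they are only randomly initialized, so the vectors carry no meaning yet.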

Take hashtags: #AIethics might embed close to #MachineLearningBias, surfacing related content even if it’s fresh. This isn’t guesswork; it’s geometry in action, reducing cold start penalties by up to 50%.

Tackling the Cold Start with Embeddings

- Mapping New Entities: Use cosine similarity to find a new ID’s nearest known neighbors and start its embedding near them.

- Dynamic Updates: Embeddings evolve with interactions, self-correcting over time.

- Case Study: LinkedIn’s rollout saw a 12% engagement uplift for viral new topics.
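The first two bullets can be sketched in a few lines. This assumes the new ID arrives with a content vector that lives in the same space as the learned embeddings (for example, from a text encoder trained to match them, a hypothetical component here), and that dynamic updates are simple steps toward vectors of co-engaged items. All hashtags and numbers are made up.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def nearest_neighbors(query, embeddings, k):
    """The k known IDs whose embeddings are most cosine-similar to `query`."""
    return sorted(embeddings, key=lambda i: cosine(query, embeddings[i]), reverse=True)[:k]

def cold_start_embedding(content_vector, embeddings, k=2):
    """Start a brand-new ID at the mean of its k nearest known neighbors."""
    neighbors = nearest_neighbors(content_vector, embeddings, k)
    dim = len(content_vector)
    return [sum(embeddings[n][d] for n in neighbors) / len(neighbors) for d in range(dim)]

def update_embedding(vec, target, lr=0.1):
    """Dynamic update: move the embedding a step toward an observed co-engagement vector."""
    return [v + lr * (t - v) for v, t in zip(vec, target)]

# Hypothetical learned embeddings for established hashtags.
embeddings = {
    "#MachineLearningBias": [0.9, 0.1, 0.0],
    "#ResponsibleAI": [0.8, 0.3, 0.1],
    "#SalesTips": [0.0, 0.2, 0.9],
}
new_tag_content = [0.85, 0.2, 0.05]  # assumed encoder output for a fresh hashtag like #AIethics
new_vec = cold_start_embedding(new_tag_content, embeddings)
print(nearest_neighbors(new_tag_content, embeddings, 2), [round(x, 3) for x in new_vec])
```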

Large sparse ID embedding in feed ranking isn’t just technical—it’s the bridge from data chaos to delightful discovery.

Balancing Acts: Multi-Objective Optimization in LinkedIn Feed

Feeds aren’t single-goal games; they juggle relevance, diversity, and speed. Multi-objective optimization in LinkedIn’s feed handles this via weighted losses and Pareto fronts, ensuring no single objective dominates.
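Here’s a small sketch of both tools, assuming each candidate ranking configuration has been scored on normalized objectives where higher is better (so "speed" is a normalized speed score, not raw latency). The Pareto front filters out configurations that are worse on every axis; a weighted sum then picks among the survivors. Configuration names, scores, and weights are hypothetical.

```python
def dominates(a: dict, b: dict) -> bool:
    """a Pareto-dominates b: no worse on every objective and strictly better on one."""
    return all(a[k] >= b[k] for k in a) and any(a[k] > b[k] for k in a)

def pareto_front(candidates: dict) -> list:
    """Names of candidates that no other candidate dominates."""
    return [name for name, objs in candidates.items()
            if not any(dominates(other, objs)
                       for other_name, other in candidates.items() if other_name != name)]

def weighted_score(objectives: dict, weights: dict) -> float:
    """Scalarize the objectives (the weighted-loss view) into a single number."""
    return sum(weights[k] * v for k, v in objectives.items())

# Hypothetical ranking configs scored on normalized objectives (higher is better).
candidates = {
    "A": {"relevance": 0.80, "diversity": 0.40, "speed": 0.90},
    "B": {"relevance": 0.85, "diversity": 0.35, "speed": 0.70},
    "C": {"relevance": 0.75, "diversity": 0.35, "speed": 0.85},  # worse than A everywhere
}
front = pareto_front(candidates)
weights = {"relevance": 0.6, "diversity": 0.2, "speed": 0.2}
best = max(front, key=lambda n: weighted_score(candidates[n], weights))
print(front, best)
```

Filtering first means the weights only have to arbitrate between genuine trade-offs (A is faster and more diverse, B is more relevant) rather than rescue a configuration that loses on every axis.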

In LinkedIn’s stack, inverse propensity scoring (IPS) debiases the training data: examples are reweighted by the inverse of their probability of being shown, balancing random vs. non-random sessions so underrepresented content isn’t drowned out. It’s like a conductor harmonizing an orchestra: engagement metrics rise without sacrificing load times.
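Inverse propensity weighting can be sketched as a weighted log loss, assuming each logged impression comes with its propensity, the estimated probability that the production ranker showed it (randomized sessions are one way to estimate this). Clipping large weights is a common variance control and an assumption here, not a documented LinkedIn setting.

```python
import math

def ips_weights(propensities, clip=10.0):
    """Weight each logged impression by 1 / P(shown), capped at `clip` to limit variance."""
    return [min(1.0 / p, clip) for p in propensities]

def ips_log_loss(preds, labels, propensities, clip=10.0):
    """Self-normalized IPS log loss: rarely shown impressions count for more."""
    eps = 1e-7
    weights = ips_weights(propensities, clip)
    total = 0.0
    for p_hat, y, w in zip(preds, labels, weights):
        p_hat = min(max(p_hat, eps), 1 - eps)
        total += w * -(y * math.log(p_hat) + (1 - y) * math.log(1 - p_hat))
    return total / sum(weights)

# Popular post shown 90% of the time vs. niche post shown 10% of the time (hypothetical).
preds, labels, props = [0.7, 0.2], [1, 1], [0.9, 0.1]
print(round(ips_log_loss(preds, labels, [1.0, 1.0]), 4),  # unweighted baseline
      round(ips_log_loss(preds, labels, props), 4))       # IPS-weighted
```

The model badly underpredicts engagement on the niche post; without weighting, that miss is averaged away, while IPS makes it dominate the loss so training pushes to correct it.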

Industry patterns show this approach cuts bias by 30%, per KDD 2024 papers. For LinkedIn, it means feeds that serve job seekers and recruiters equally, fostering a healthier network. (haoxuanli-pku.github.io)

Practical Tips for Optimization

- Prioritize Core Metrics: Weight CTR higher for active users.

- A/B Test Ruthlessly: Roll out changes to 1% of traffic first.

- Monitor Drift: Re-optimize quarterly as behaviors shift.
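For the 1%-first rollout, a common approach is deterministic hash-based bucketing: hash the experiment name together with the member ID so each member lands in the same arm every session. The function below is a hypothetical sketch, not a LinkedIn API, and the experiment name is made up.

```python
import hashlib

def in_treatment(member_id: str, experiment: str, ramp_percent: float) -> bool:
    """Deterministically place `ramp_percent`% of members in the treatment arm."""
    key = f"{experiment}:{member_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % 10_000
    # 10,000 buckets, so a 1% ramp means buckets 0-99.
    return bucket < ramp_percent * 100

members = [f"member_{i}" for i in range(100_000)]
treated = sum(in_treatment(m, "feed_ranker_v2", 1.0) for m in members)
print(f"{treated} of {len(members)} members in treatment")
```

Because a member’s bucket never changes, raising the ramp from 1% to 5% keeps the original 1% in treatment and simply adds members, which keeps before-and-after comparisons clean.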

Multi-objective optimization keeps LinkedIn’s feed evolving, one balanced update at a time.

Predicting the Unpredictable: Member Behavior Prediction in LinkedIn Feed

What if your feed knew you’d binge on podcast recs before you did? Member behavior prediction in LinkedIn’s feed uses aggregated embeddings of past interactions (for example, embeddings of a member’s top liked posts over several months, pooled and fed into MLPs) to forecast future actions.

This predictive modeling of user interactions captures nuances such as group-specific popularity, outperforming single-signal models by 18% in precision. It’s storytelling through stats: your history narrates what comes next.
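To make the pooling idea concrete, here’s a sketch that mean-pools the embeddings of posts a member liked into one history vector, concatenates it with a candidate post’s embedding, and scores the pair with a tiny two-layer MLP. The 2-dimensional embeddings and the hand-picked weights are hypothetical stand-ins for learned ones.

```python
import math

def mean_pool(vectors):
    """Average a list of equal-length embeddings into one vector."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def mlp_predict(features, W1, b1, w2, b2):
    """Two-layer MLP: ReLU hidden layer, sigmoid output = P(member engages)."""
    hidden = [max(0.0, sum(w * f for w, f in zip(row, features)) + b)
              for row, b in zip(W1, b1)]
    logit = sum(w * h for w, h in zip(w2, hidden)) + b2
    return 1.0 / (1.0 + math.exp(-logit))

def predict_engagement(liked_post_embeddings, candidate_embedding, params):
    """Pool the member's history, concatenate the candidate, and score with the MLP."""
    history = mean_pool(liked_post_embeddings)
    return mlp_predict(history + candidate_embedding, *params)

# Hypothetical hand-set weights: each hidden unit fires when history and candidate share a topic.
params = (
    [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]],  # W1
    [-1.0, -1.0],                                    # b1
    [2.0, 2.0],                                      # w2
    -1.0,                                            # b2
)
liked = [[1.0, 0.0], [0.8, 0.2]]  # history leans toward topic 0
on_topic, off_topic = [1.0, 0.0], [0.0, 1.0]
print(round(predict_engagement(liked, on_topic, params), 3),
      round(predict_engagement(liked, off_topic, params), 3))
```

With a history that leans heavily toward the first topic, the on-topic candidate scores noticeably higher than the off-topic one.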

Real scenario: A sales rep’s feed ramps up negotiation tips during Q4, predicted from seasonal patterns. Challenges? Sparsity in new markets, but pooling helps.

Strategies for Accurate Predictions

- Temporal Weighting: Recent actions count double.

- Ensemble Methods: Blend neural nets with trees for robustness.

- Ethical Guardrails: Avoid over-personalization that feels creepy.
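The first two strategies fit in a few lines. The sketch assumes "recent" means within a hypothetical 30-day window, so those actions get weight 2 in the pooled history, and it blends a neural net’s and a tree model’s probabilities with a simple weighted average. The window, weights, and sample events are illustrative, not LinkedIn’s settings.

```python
def temporally_weighted_pool(events, now_days, recent_window_days=30.0, recent_weight=2.0):
    """Weighted mean of interaction embeddings in which recent actions count double.

    events: list of (timestamp_in_days, embedding) pairs.
    """
    if not events:
        raise ValueError("need at least one interaction")
    acc, total_w = [0.0] * len(events[0][1]), 0.0
    for t, emb in events:
        w = recent_weight if now_days - t <= recent_window_days else 1.0
        for i, e in enumerate(emb):
            acc[i] += w * e
        total_w += w
    return [a / total_w for a in acc]

def blend(nn_prob, tree_prob, nn_weight=0.5):
    """Simple ensemble: weighted average of a neural net's and a tree model's probabilities."""
    return nn_weight * nn_prob + (1 - nn_weight) * tree_prob

events = [(95.0, [1.0, 0.0]), (10.0, [0.0, 1.0])]  # one recent action, one old action
print([round(x, 3) for x in temporally_weighted_pool(events, now_days=100.0)],
      round(blend(0.8, 0.6), 3))
```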

Member behavior prediction turns data into foresight, making every swipe feel fated.

FAQs

What is LinkedIn’s homepage feed relevance optimization method?

It’s a deep learning pipeline that uses LAR embeddings and multi-task models to tailor content, tackling data sparsity to deliver highly personalized feeds.

What role do sparse ID embeddings play in LinkedIn feed ranking?

They convert high-dimensional IDs into meaningful dense vectors, enabling similarity-based ranking and easing the cold-start problem.

What metrics assess LinkedIn feed relevance and engagement?

Click-through rate (CTR), dwell time, and diversity indices, which together give a holistic view of the value delivered.

How does LinkedIn utilize deep learning to improve feed relevance?

It uses MLPs and gating layers to model feature interactions, trained on debiased data for fairer personalization.

How does multi-objective optimization work in LinkedIn’s feed ranking?

It weights the losses for competing goals (e.g., relevance vs. speed) and uses propensity scores to balance trade-offs.

Can multi-task learning models enhance LinkedIn feed personalization?

Absolutely: they reduce overfitting and lift relevance by 20-30%.

Should LinkedIn feed models consider multiple user actions simultaneously?

Yes, for a holistic view; siloed models miss the synergies between actions.

Are LinkedIn’s feed relevance improvements beneficial for all members?

Mostly, but cold-start issues linger; ongoing tweaks aim to make the feed work for everyone.

Conclusion: Why This Matters for Your LinkedIn Game

We’ve journeyed from sparse embeddings to scalable predictions, uncovering how LinkedIn homepage feed relevance optimization elevates every interaction. It’s not just better feeds—it’s empowered professionals, stronger networks, and careers that click.

Whether you’re a dev eyeing tools for building multi-task learning feed ranking systems or a user tweaking your profile for better recs, the takeaway is clear: tech like this democratizes opportunity. Dive into LinkedIn’s engineering blog for more, experiment with your settings, and watch your feed transform. What’s one change you’ll make today? Share in the comments, and let’s keep the conversation going. Visit CareerSwami for more.