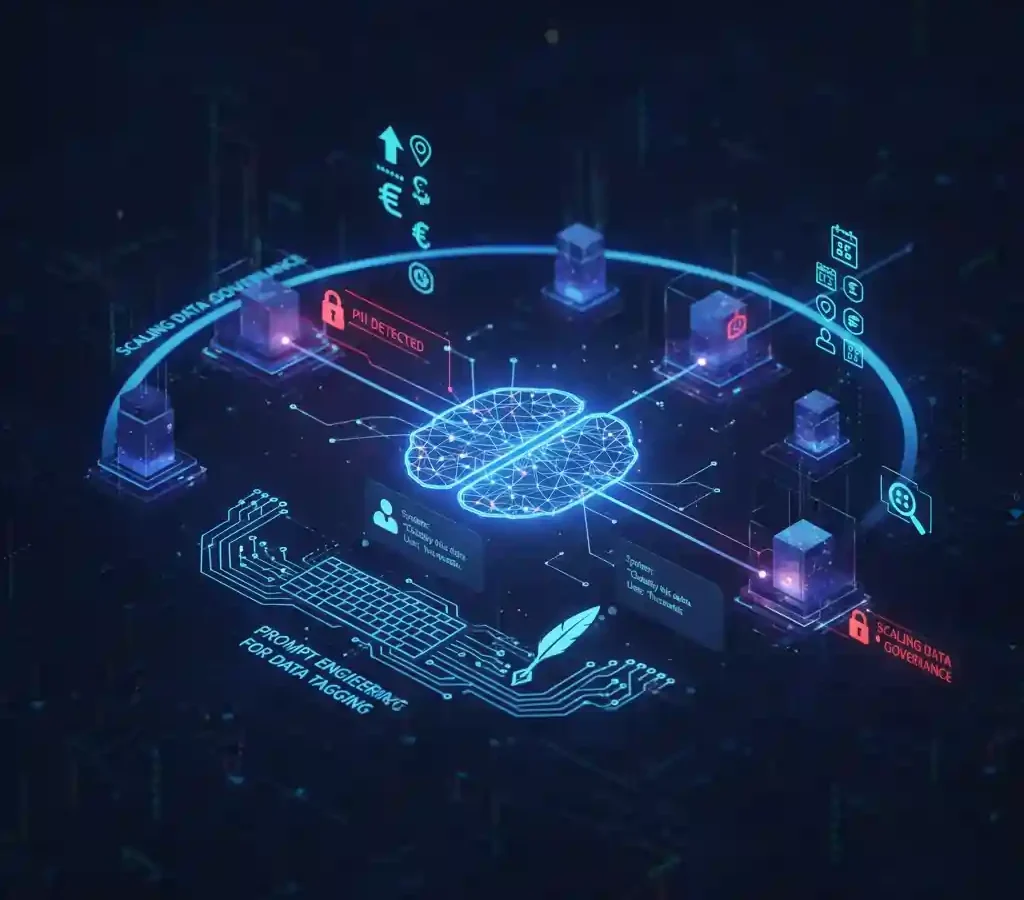

Hey there, data wrangler. Ever stared at a mountain of schemas, tables, and streams, wondering how on earth you’ll tag them all without losing your mind? You’re not alone. In today’s data explosion, where companies juggle petabytes like it’s no big deal, manual classification feels like using a typewriter in a smartphone world. Enter LLM-powered data classification strategies. These game-changers use large language models to automate the grunt work, spotting sensitive info like PII faster than you can say “compliance nightmare.”

I’ve been knee-deep in data governance for years, and let me tell you: shifting to LLMs isn’t just smart, it’s essential. Picture this: instead of tagging columns one by one, your system batches requests, prompts an AI wizard, and spits out JSON-ready tags. In this post, we’ll dive into seven strategies that can slash your manual hours, amp up accuracy, and keep regulators off your back. We’ll mix in real stats, tips you can steal tomorrow, and a case study that’ll make you rethink your workflow. Ready to level up? Let’s roll.


Why LLM-Powered Data Classification Strategies Are Your New Best Friend

Data’s everywhere, right? Global data creation hit 120 zettabytes in 2023, and it’s doubling every few years. But here’s the kicker: without proper classification, that goldmine turns into a liability. Think leaked PII leading to fines: GDPR violations alone cost businesses over $4 billion last year. That’s where automated PII detection shines, using AI to flag names, IDs, or addresses before they cause chaos.

Traditional methods? Regex rules and basic ML classifiers work for cookie-cutter cases but flop on nuanced stuff like distinguishing a government ID from an internal merchant code. LLMs flip the script. They grok context, like knowing your biz does ride-hailing and deliveries, so they tag smarter, not harder.

The payoff? One team I know cut classification time from hours to minutes per entity, saving hundreds of man-days annually. And accuracy? Users tweak less than one tag per batch on average. If you’re scaling data governance with LLMs, you’re not just efficient, you’re bulletproof.

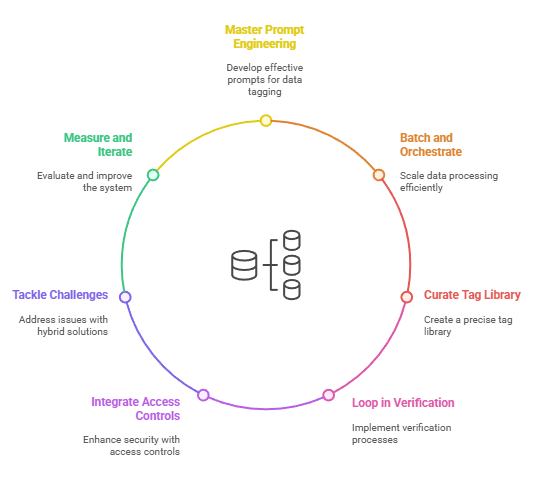

Strategy 1: Master Prompt Engineering for Data Tagging Like a Pro

Prompt engineering for data tagging is the secret sauce. It’s not rocket science, but it does require finesse. Start simple: craft prompts that treat the LLM like a picky intern with clear instructions.

Here’s how to nail it:

- Be brutally specific: Tell the model exactly what to do. “Assign one tag per column based on table name ‘deliveries’ and columns like ‘merchant_id’ or ‘status’. Use our tag library: Personal.ID for gov-issued IDs only, otherwise the default tag.”

- Few-shot your way to wins: Drop in 2-3 examples. For a “users” table, show: “column: email → Personal.Contact_Info; column: status → the default tag.” This guides without overwhelming.

- Enforce structure: Demand JSON output. “Respond only in this format: [{"column_name": "x", "assigned_tag": "y"}]. No chit-chat.” Tools like schema validators catch slip-ups.

Real talk: In one setup, this bumped tag precision from 70% to 95%. Actionable tip? Test prompts on a sandbox dataset weekly. Tweak based on false positives; your future self will high-five you.
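To make the three tips concrete, here’s a minimal sketch of a prompt builder plus a strict response check. Everything named here is an assumption for illustration: the `TAGS` entries, the `build_prompt` and `parse_response` helpers, and especially `DEFAULT_TAG = "None"`, which is just a placeholder name for the default tag. No model call is made; you’d plug in your own client.

```python
import json

DEFAULT_TAG = "None"  # placeholder name for the default tag (assumption)

# Hypothetical tag library; swap in your own definitions.
TAGS = {
    "Personal.ID": "Government-issued IDs only (e.g., passport numbers).",
    "Personal.Contact_Info": "Emails, phone numbers, addresses.",
    DEFAULT_TAG: "Use when no other tag applies.",
}

# Few-shot examples for a 'users' table, mirroring the tip above.
FEW_SHOT = [
    {"column_name": "email", "assigned_tag": "Personal.Contact_Info"},
    {"column_name": "status", "assigned_tag": DEFAULT_TAG},
]


def build_prompt(table, columns):
    """Build a specific, few-shot, JSON-only tagging prompt."""
    tag_lines = "\n".join(f"- {name}: {desc}" for name, desc in TAGS.items())
    return (
        f"Assign exactly one tag to each column of table '{table}'.\n"
        f"Allowed tags:\n{tag_lines}\n"
        f"Example for table 'users': {json.dumps(FEW_SHOT)}\n"
        f"Columns: {', '.join(columns)}\n"
        "Respond only with a JSON array of objects with the keys "
        "column_name and assigned_tag. No other text."
    )


def parse_response(raw, columns):
    """Validate the model's reply; raise ValueError on any deviation."""
    try:
        items = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(items, list):
        raise ValueError("expected a JSON array")
    result = {}
    for item in items:
        if not isinstance(item, dict) or set(item) != {"column_name", "assigned_tag"}:
            raise ValueError(f"unexpected item shape: {item!r}")
        if not isinstance(item["column_name"], str):
            raise ValueError("column_name must be a string")
        if item["assigned_tag"] not in TAGS:
            raise ValueError(f"unknown tag: {item['assigned_tag']!r}")
        result[item["column_name"]] = item["assigned_tag"]
    if set(result) != set(columns):
        raise ValueError("response does not cover exactly the requested columns")
    return result
```

A rejected reply is your cue to retry or log the prompt for the weekly sandbox review, rather than letting a half-parsed answer leak into your catalog.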

Strategy 2: Batch and Orchestrate for Seamless Scaling

Scaling data governance with LLMs means handling volume without melting servers. Enter batching: group schemas into mini-batches that fit LLM limits (say, 4,000 tokens per go).
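Here’s one rough way to do that batching. Treat it as a sketch: `estimate_tokens` is a crude characters-divided-by-four heuristic (an assumption, not a real tokenizer), and the `overhead` reserved for instructions and the reply is a made-up number you’d tune.

```python
def estimate_tokens(text):
    # Crude heuristic (about 4 characters per token); an assumption,
    # not a real tokenizer. Swap in your provider's tokenizer if you have one.
    return max(1, len(text) // 4)


def batch_by_tokens(items, budget=4000, overhead=500):
    """Group strings into batches whose estimated size fits budget - overhead."""
    limit = budget - overhead
    batches, current, used = [], [], 0
    for item in items:
        cost = estimate_tokens(item)
        if cost > limit:
            raise ValueError(f"single item exceeds the token limit: {item[:40]!r}")
        # Start a new batch when adding this item would overflow the limit.
        if current and used + cost > limit:
            batches.append(current)
            current, used = [], 0
        current.append(item)
        used += cost
    if current:
        batches.append(current)
    return batches
```

Each item here would be one serialized schema description (table name plus columns). Oversized items raise instead of being silently truncated, so you notice the monster tables early.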

Build an orchestrator: think a lightweight service that queues requests every hour. It pulls from databases or streams, fires off to the LLM, and routes results back via message queues. Pro tip: Add a rate limiter to dodge API throttling. A quota like 240,000 tokens per minute is one example; check the limit your own provider gives you.
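A rate limiter for this can be as simple as a token bucket. The sketch below is one possible shape, not a production component: the `TokenRateLimiter` class is hypothetical, and the `clock` and `sleep` parameters exist only so you can test it without actually waiting.

```python
import time


class TokenRateLimiter:
    """Token bucket that refills continuously up to a per-minute quota."""

    def __init__(self, tokens_per_minute, clock=time.monotonic, sleep=time.sleep):
        self.capacity = float(tokens_per_minute)
        self.rate = tokens_per_minute / 60.0  # tokens refilled per second
        self.available = self.capacity
        self.clock = clock
        self.sleep = sleep
        self.last = clock()

    def _refill(self):
        now = self.clock()
        self.available = min(self.capacity, self.available + (now - self.last) * self.rate)
        self.last = now

    def acquire(self, tokens):
        """Block until `tokens` are available; return the seconds waited."""
        if tokens > self.capacity:
            raise ValueError("request larger than the per-minute quota")
        self._refill()
        waited = 0.0
        if tokens > self.available:
            waited = (tokens - self.available) / self.rate
            self.sleep(waited)
            self._refill()
        self.available -= tokens
        return waited
```

Call `acquire(estimated_tokens)` right before each LLM request. This version is single-threaded; if your orchestrator runs workers in parallel, you’d need a lock or a shared limiter service.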

Benefits stack up quickly. A setup like this scanned 20,000+ entities in a month, averaging 300-400 daily. No more weekend marathons for your team. And hybrid it: Run alongside regex for a safety net, then compare outputs to iterate.
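For the hybrid comparison, a tiny diff between regex guesses and LLM tags goes a long way. This is a sketch with invented pieces: the three `REGEX_RULES` patterns match on column names only, and the tag names are illustrative.

```python
import re

# Hypothetical column-name rules; real setups usually have many more.
REGEX_RULES = [
    (re.compile(r"(^|_)e?mail(_|$)", re.IGNORECASE), "Personal.Contact_Info"),
    (re.compile(r"(^|_)phone(_|$)", re.IGNORECASE), "Personal.Contact_Info"),
    (re.compile(r"(^|_)passport(_|$)", re.IGNORECASE), "Personal.ID"),
]


def regex_tag(column):
    """Return the first matching rule's tag, or None if no rule fires."""
    for pattern, tag in REGEX_RULES:
        if pattern.search(column):
            return tag
    return None


def disagreements(llm_tags):
    """List (column, regex_tag, llm_tag) where a regex rule fired but the LLM differs."""
    out = []
    for column, llm in llm_tags.items():
        rx = regex_tag(column)
        if rx is not None and rx != llm:
            out.append((column, rx, llm))
    return out
```

Every disagreement is a free test case: either the regex is too eager or the prompt needs another example.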

Strategy 3: Curate a Killer Tag Library for Precision Hits

Data metadata generation with AI thrives on a solid foundation: your tag library. Don’t wing it, curate tags that mirror your world. For PII-heavy ops, include:

- Personal.Name: Full names or usernames.

- Personal.Contact_Info: Emails, phones, addresses.

- Geo.Location: Lat-long or geohashes.

- The default tag: The humble fallback for “not sure.”

Keep definitions tight: “Personal.ID only for official docs like passports, skip internal codes.” This curbs over-tagging, where half your schemas get slapped as “highly sensitive” by mistake.

Stats back it: Teams with refined libraries see 80% user satisfaction in tagging aids. Actionable? Audit your library quarterly. Poll data owners: “Does this tag fit?” Refine, rinse, repeat.
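If you want numbers to bring to that quarterly audit, a small usage report helps. The sketch below assumes you can export (column, tag) pairs from your catalog; `audit_tag_usage` and the sample `LIBRARY` set are hypothetical.

```python
from collections import Counter

# Hypothetical library; use your real tag names here.
LIBRARY = {"Personal.Name", "Personal.Contact_Info", "Personal.ID"}


def audit_tag_usage(assignments, library):
    """Summarize tag usage from an iterable of (column, tag) pairs.

    Returns (shares, unused, unknown):
      shares  - fraction of columns carrying each tag
      unused  - library tags that were never assigned
      unknown - assigned tags that are missing from the library
    """
    counts = Counter(tag for _, tag in assignments)
    total = sum(counts.values())
    shares = {tag: n / total for tag, n in counts.items()} if total else {}
    unused = sorted(library - set(counts))
    unknown = sorted(set(counts) - library)
    return shares, unused, unknown
```

A tag with a suspiciously large share is a candidate for a tighter definition; an unused tag might be dead weight in the prompt.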

Strategy 4: Loop in Verification Without the Headache

Automation’s great, but trust is earned. Bake in a feedback loop: Push predictions to a dashboard, notify owners weekly via email or Slack. “Hey, review these 50 tags, takes 2 minutes.”

Keep it light: Aim for under 1% changes per batch as your green light for full auto. One org hit this after three months, unlocking dynamic masking, hiding PII in queries on the fly.
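That green light is easy to compute once you store both the predicted and the reviewed tags. A minimal sketch: `min_batches=3` (how many consecutive clean batches you require) is my assumption, not a number from any real deployment.

```python
def change_rate(predicted, reviewed):
    """Fraction of predicted tags that owners changed during review.

    Both arguments map column name -> tag. Columns missing from
    `reviewed` count as unchanged.
    """
    if not predicted:
        raise ValueError("empty batch")
    changed = sum(
        1 for column, tag in predicted.items() if reviewed.get(column, tag) != tag
    )
    return changed / len(predicted)


def ready_for_auto(rates, threshold=0.01, min_batches=3):
    """True when the last `min_batches` batches all stayed under the threshold."""
    recent = rates[-min_batches:]
    return len(recent) == min_batches and all(r < threshold for r in recent)
```

Requiring a streak instead of one good batch protects you from flipping to full auto after a single quiet week.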

Challenge? Owner burnout. Fix it with gamification: Leaderboards for quick reviews. Result? Higher engagement and cleaner data.

Strategy 5: Integrate with Access Controls for Ironclad Security

Why stop at tags? Feed them into ABAC (attribute-based access control). A “Tier 1” tag (ultra-sensitive) locks down the whole schema, no exceptions.

Example: In financial services, tag a “transactions” column as Personal.Financial_Info? Boom, only compliance folks see it. This slashed unauthorized access by 60% in one audit.
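Here’s a toy version of how tags can drive an access decision. It’s a sketch under loud assumptions: the tier numbers, the `ROLE_MIN_TIER` mapping, and the role names are all invented, and a real ABAC engine would evaluate far richer attributes.

```python
# Hypothetical tag -> tier mapping (1 is the most sensitive).
TAG_TIER = {"Personal.Financial_Info": 1, "Personal.Contact_Info": 2}
DEFAULT_TIER = 4  # tier for tags not listed above (assumption)

# Most sensitive tier each role may read (assumption).
ROLE_MIN_TIER = {"compliance": 1, "analyst": 3}


def column_tier(tag):
    return TAG_TIER.get(tag, DEFAULT_TIER)


def can_read(role, tag):
    """A role may read a column if the column's tier is at or above its floor."""
    floor = ROLE_MIN_TIER.get(role)
    return floor is not None and column_tier(tag) >= floor


def mask_row(row, column_tags, role, mask="***"):
    """Return a copy of `row` with unreadable values masked.

    Columns without any tag are masked too, so untagged data fails closed.
    """
    masked = {}
    for column, value in row.items():
        tag = column_tags.get(column)
        allowed = tag is not None and can_read(role, tag)
        masked[column] = value if allowed else mask
    return masked
```

The fail-closed choice for untagged columns is deliberate: a missing tag should slow someone down, not leak a field.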

Tip: Start small. Pilot on one platform, measure query speed post-masking. Tools like Kafka for pub-sub make integration a breeze.

Strategy 6: Tackle Challenges Head-On with Hybrid Smarts

No rose-tinted glasses: LLMs have quirks. Token limits? Batch smarter. Parsing fails? Schema-enforce like your data depends on it (it does).

Biggest hurdle: False positives from vague names. Solution: Add confidence scores to outputs. “Tag: Personal.ID, confidence: 0.85.” Low scores trigger human eyes.
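Routing on that score takes only a few lines. In this sketch the `0.8` threshold and the `route_by_confidence` helper are assumptions, and keep in mind that a model’s self-reported confidence isn’t a calibrated probability, so tune the cutoff against reviewed data.

```python
def route_by_confidence(predictions, threshold=0.8):
    """Split predictions into (auto_accept, needs_review).

    Each prediction is a dict such as
    {"column_name": "nric", "assigned_tag": "Personal.ID", "confidence": 0.85}.
    Missing, non-numeric, or out-of-range scores go to review.
    """
    auto_accept, needs_review = [], []
    for pred in predictions:
        score = pred.get("confidence")
        valid = (
            isinstance(score, (int, float))
            and not isinstance(score, bool)
            and 0.0 <= score <= 1.0
        )
        if valid and score >= threshold:
            auto_accept.append(pred)
        else:
            needs_review.append(pred)
    return auto_accept, needs_review
```

Anything the model forgot to score lands in the review pile by default, which is the safer failure mode.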

From the trenches: A delivery app fought schema over-classification (50% Tier 1 bloat) by going granular. Post-LLM? Access opened up without risks, saving dev time on workarounds.

Strategy 7: Measure, Iterate, and Future-Proof Your Setup

Track everything: Tag accuracy, processing time, cost per entity. Dashboards are your friend; plot trends in a simple line chart to spot prompt drifts.
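Those three metrics, plus a basic drift check, fit in a short script. A sketch only: `price_per_1k_tokens` is whatever your provider charges, and the `window` and `tolerance` defaults in `accuracy_drifted` are arbitrary starting points.

```python
from statistics import mean


def summarize_run(predicted, confirmed, entity_count, tokens_used,
                  price_per_1k_tokens, seconds):
    """Compute accuracy, cost per entity, and seconds per entity for one run.

    `predicted` and `confirmed` map column name -> tag; accuracy is measured
    only on columns that owners actually confirmed.
    """
    checked = [c for c in predicted if c in confirmed]
    if not checked or entity_count <= 0:
        raise ValueError("need confirmed columns and a positive entity count")
    correct = sum(1 for c in checked if predicted[c] == confirmed[c])
    cost = tokens_used / 1000 * price_per_1k_tokens
    return {
        "accuracy": correct / len(checked),
        "cost_per_entity": cost / entity_count,
        "seconds_per_entity": seconds / entity_count,
    }


def accuracy_drifted(history, window=4, tolerance=0.05):
    """True if the recent average fell more than `tolerance` below the baseline.

    Baseline is the first `window` runs; recent is the last `window` runs.
    """
    if len(history) < 2 * window:
        return False
    return mean(history[:window]) - mean(history[-window:]) > tolerance
```

Feed `accuracy_drifted` the accuracy from each weekly run; a `True` is your nudge to revisit the prompt before owners start complaining.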

Future-proof? Eye multimodal LLMs for image/text hybrids. Or federated learning to train without centralizing sensitive data.

One stat: Automation like this saves 360 man-days yearly at scale. Invest in analytics now, and you’re set for the next data wave.

Real-World Case Study: Revolutionizing Data Ops in a Superapp Empire

Let’s get concrete. Imagine a Southeast Asian giant handling rides, deliveries, and fintech across 400+ cities. Their data? Petabytes, with schemas popping like fireworks. Manual tagging choked everything; overly broad “sensitive” labels blocked analysts from benign tables.

Enter LLM-powered data classification strategies. They built a Gemini-like orchestrator: Queues scan requests, batches to GPT-3.5, outputs JSON tags. Tag library? Tailored for PII like NRIC IDs vs. merchant codes.

Results? In month one: 20,000 entities classified. Users loved it: 80% in a survey called it a tagging lifesaver, changing <1 tag per review. Downstream? ABAC policies auto-enforce tiers, and a privacy sandbox proved it compliant for innovative queries.

Challenges hit: Token quotas forced clever batching; prompt tweaks fixed 10% JSON busts. Net win? Man-days saved, compliance aced, and data flowing freer. If they can scale it across eight countries, so can you.

Wrapping It Up: Your Turn to Deploy These Strategies

There you have it: seven LLM-powered data classification strategies to supercharge your game. From prompt wizardry to verification smarts, these aren’t theory; they’re battle-tested paths to efficiency.

Stats don’t lie: With data volumes exploding and fines lurking, ignoring this is risky. Grab the reins: Pick one strategy, pilot it this week. Your team’s sanity (and your boss’s smile) will thank you.

What’s your biggest data headache? Drop a comment, let’s chat. And if this sparked ideas, share it with a fellow data ninja.

FAQs

How does automated PII detection with AI improve over traditional methods?

Automated PII detection with AI, powered by LLMs, understands context, like spotting a passport number but ignoring an internal user ID. Unlike rigid regex (which flags 20-30% false positives), it hits 95% accuracy with proper prompts, saving hours and dodging fines.

What role does data metadata generation with AI play in enterprise scaling?

Data metadata generation with AI tags columns at scale, turning petabyte chaos into searchable assets. It enables quick discovery for analysts while enforcing access rules. Think 300+ entities daily without extra headcount.

Can prompt engineering for data tagging really boost LLM accuracy without coding?

Absolutely. Prompt engineering for data tagging uses natural language to guide LLMs, like adding examples to cut errors by 25%. No PhD needed, just clear instructions and a test loop to refine on the fly.

What are the top challenges in scaling data governance with LLMs, and how to fix them?

Top hurdles: Token limits and verification lag. Fix with batching (under 4K tokens per run) and weekly nudges to owners. One fix saw changes drop below 1%, paving the way for auto-approval.

How do LLM-powered data classification strategies ensure regulatory compliance?

They tier data sensitivity (e.g., Tier 1 for high-risk PII) and integrate with ABAC for masking. In sandboxes, they’ve proven secure for queries, helping avoid GDPR hits while unlocking insights.