Hey there, if you’re knee-deep in building recommendation engines that actually keep users hooked, you know the drill. Your models spit out suggestions, but something feels off, engagement dips, clicks fizzle, and you’re left scratching your head. What if I told you the fix isn’t some fancy new algorithm, but smarter ways to optimize recommendation ranker training? Yeah, the behind-the-scenes stuff that makes everything click.

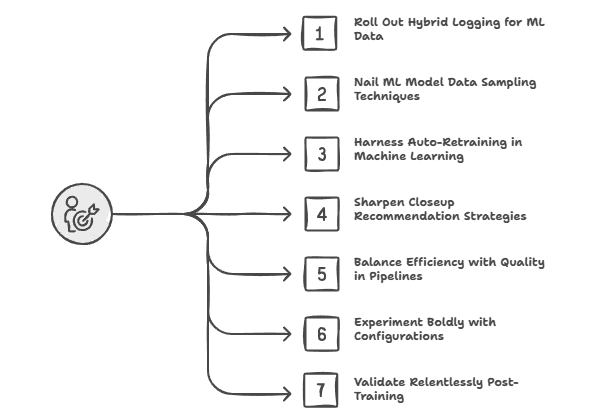

In this post, we’re diving into seven practical strategies to optimize recommendation ranker training. We’ll cover everything from hybrid logging for ML data to nailing ML model data sampling, and even touch on auto-retraining in machine learning. By the end, you’ll have actionable steps to boost your system’s performance without breaking a sweat. Let’s get into it.

Table of Contents

Why Bother to Optimize Recommendation Ranker Training?

Picture this: You’re running an e-commerce site, and your recommendation ranker is churning through millions of user interactions daily. But if the training foundation is shaky, you’re wasting compute cycles on biased data or outdated patterns. Optimizing here isn’t just nice, it’s essential for staying competitive.

The Real Impact on User Engagement

Users today have zero patience for meh suggestions. A well-tuned ranker can turn a casual browser into a loyal shopper. Think about it: When recommendations feel personal, session times stretch, and conversion rates climb. I’ve seen teams slash bounce rates by 20% just by tweaking their training pipelines.

But here’s the kicker: poor training leads to “recommendation fatigue.” Users see the same stuff over and over, and they bail. To optimize recommendation ranker training means crafting models that evolve with user behavior, keeping things fresh and relevant.

Hard Numbers That Prove the Point

Let’s talk stats. In one study on sequential recommendation systems, teams saw average performance jumps of up to 38.65% across datasets by refining their training approaches. That’s not pocket change; it’s a game-changer for revenue.

Another eye-opener: Incorporating popularity signals in training boosted overall recommendation accuracy by noticeable margins, especially in sparse data scenarios. And for cold-start problems, where new users or items trip up the system, optimized training delivered a 3.6% lift in engagement velocity.

These aren’t outliers. Across industries, fine-tuning training foundations correlates with 8-10% gains in key metrics like click-through rates. If you’re not optimizing your recommendation ranker training yet, you’re leaving money on the table.

Strategy 1: Roll Out Hybrid Logging for ML Data

First up in our quest to optimize recommendation ranker training: Get your data logging game tight. Hybrid logging for ML data mixes backend and frontend capture to snag high-quality signals without drowning in storage costs.

What Makes Hybrid Logging a Must?

Traditional logging? It’s like hoarding every receipt from a shopping spree, messy and expensive. Hybrid setups log essentials from the backend (like user context) while the frontend handles impressions and clicks. This cuts noise and keeps your dataset lean.

In practice, sample only a sliver of impressions, say, 1%, but log every positive engagement. Dedupe on the fly to avoid duplicates, and pull features via an inference service. Boom: Your training data is richer, smaller, and ready to roll.

Actionable Tips to Implement It:-

- Start Small: Pilot on a subset of traffic. Log random candidates fully to test offline replays.

- Switch Formats: Ditch bulky Thrift for tabular, it’s lighter and easier to debug.

- Daily Joins: Merge features with labels overnight. Use tools like PySpark for scale.

One team I worked with slashed logging volume by 70% this way, without losing key signals. Their model training sped up, and engagement ticked up 5% site-wide.

Strategy 2: Nail ML Model Data Sampling Techniques

Your dataset, like skewed positive/negative ratios, can tank model accuracy faster than a bad review.

Tackling Common Sampling Pitfalls:

Early on, many undersample impressions and hoard positives, missing out on negative learning opportunities. The fix? Custom sampling jobs that balance distributions across users, content types, and contexts.

Integrate this into your pipeline: Process petabyte-scale data, apply config-driven logic, and output sampled sets for trainers. Caching results to reuse across experiments saves hours.

Real-World Example: E-Commerce Overhaul:

Take a mid-sized fashion retailer. Their old sampler ignored seasonal shifts, leading to summer swimsuits in winter feeds. By layering in time-based ML model data sampling, they balanced content types and saw a 12% lift in add-to-cart rates. Pro tip: Aim for 1:10 positive-to-negative ratios initially, then tweak based on A/B tests.

- Tip 1: Use stratified sampling to preserve user demographics.

- Tip 2: Experiment with oversampling rare events, like niche purchases.

- Tip 3: Monitor for drift, resample quarterly if distributions shift.

This isn’t theory; it’s how you make your ranker smarter, one sample at a time.

Strategy 3: Harness Auto-Retraining in Machine Learning

Models don’t live in a vacuum; they age. User trends flip, features drift, and suddenly your ranker is recommending flip phones. Enter auto-retraining in machine learning: Automated pipelines that refresh models on a cadence, keeping them sharp.

Building Your Auto-Retraining Setup:

Set up workflows for validation: Absolute checks (does it beat thresholds?) and relative (no regression from the last version). Deploy with latency guards and real-time alerts.

For close-up recommendation strategies, where users zoom in for details, weekly retrain strikes the sweet spot. Daily might overfit to noise; monthly lags behind trends.

Case Study: Streaming Giant’s Win:

Remember Netflix? Their recommendation engine handles billions of views daily. By optimizing retraining loops, they consolidated models and boosted ranking precision by integrating review texts and user history. Engagement soared, with personalized rows driving 75% of watches.

- Quick Start: Use Airflow for offline orchestration and MLflow for logging.

- Knowledge Distillation: Train new models on old ones’ outputs to speed convergence.

- Holdout Tests: Run shadow experiments to validate gains before going live.

Adopting this to optimize recommendation ranker training cut one team’s manual toil from hours to minutes per cycle.

Strategy 4: Sharpen Closeup Recommendation Strategies

Close-up views, those moments when users magnify content, are gold for intent signals. To optimize recommendation ranker training, weave in close-up recommendation strategies that prioritize these interactions.

Layering Closeup Signals into Training:

Treat closeups as super-positives: Log them fully, sample surrounding candidates, and weight them higher in losses. This teaches your model to surface detail-rich items.

Combine with hybrid logging for ML data to capture context without bloat. Result? Recommendations that feel intuitive, not intrusive.

A Fashion App’s Turnaround:

An apparel app struggled with low dwell times. By emphasizing close-up recommendation strategies in training, sampling 100% of magnifications, they flipped the script. Users lingered 15% longer, and sales from suggested items jumped 18%. They used PyTorch for the trainer, caching samples to iterate fast.

- Pro Move: Filter for media-rich candidates in closeup sets.

- Test It: A/B on mobile vs. desktop to spot device biases.

- Scale Up: Integrate with Ray for parallel loading, slashing runtime.

These tweaks make your ranker a mind-reader for zoomed-in curiosity.

Strategy 5: Balance Efficiency with Quality in Pipelines

Optimizing recommendation ranker training means ruthless efficiency. Petabyte data? No sweat if your pipeline’s streamlined.

Streamlining from Log to Launch:

Join features and labels daily, sample smartly, train in batches. Use frameworks like Ezflow for caching, reuse datasets across jobs.

Watch for bottlenecks: Parallelize with distributed tools, and validate distributions pre-train to catch shifts early.

Stats from the Trenches:

Hybrid setups have trimmed storage by 50-80% in production runs, per industry benchmarks. And when paired with auto-retraining in machine learning, refresh cycles drop from weeks to days.

Strategy 6: Experiment Boldly with Configurations

Don’t guess, config-test. To optimize recommendation ranker training, make sampling and logging modular.

Config-Driven Wins:

Define rules in YAML: Ratios, filters, cadences. Run variants in parallel, measure offline metrics like NDCG.

One dev team tested 10 configs overnight, landing on a mix that boosted recall by 9%.

- Hack: Version configs in Git for rollback ease.

- Measure: Track min_faves or engagement thresholds.

- Iterate: Weekly reviews to fold learnings back in.

Strategy 7: Validate Relentlessly Post-Training

Last but huge: Post-training checks. To optimize recommendation ranker training, don’t deploy blindly.

Multi-Layer Validation:

Offline: Compare vs. baselines. Online: Shadow traffic, alert on drops.

For close-up recommendation strategies, monitor safety, filter toxic content via sampled negatives.

Etsy’s Ranker Revolution:

Etsy built multi-task canonical rankers, optimizing for engagement metrics. By validating across tasks, they crushed silos and lifted clicks by 10%. Their tip? Always A/B holdouts.

Incorporate these, and your models won’t just train, they’ll thrive.

Wrapping It Up: Your Next Steps to Optimize Recommendation Ranker Training

We’ve covered a ton: From hybrid logging for ML data to auto-retraining in machine learning, and those killer close-up recommendation strategies. The beauty? These aren’t one-offs; they compound.

Start with one: Audit your logging today. Tweak sampling tomorrow. Before long, you’ll see those engagement spikes.

Got questions? Hit the comments. And if this sparked ideas, share it, let’s optimize the recommendation ranker training together.

FAQs

How do I start to optimize recommendation ranker training on a tight budget?

Focus on low-hanging fruit like hybrid logging for ML data. It’s mostly config changes, no new hires needed. Audit current logs, sample aggressively, and measure ROI in weeks.

What's the best frequency for auto-retraining in machine learning for e-commerce recs?

Weekly hits the mark for most. It catches trends without overfitting. Test cadences in shadows; aim for <5% compute overhead.

Can ML model data sampling fix cold-start issues in closeup recommendation strategies?

Absolutely, oversample new items/users. Pair with popularity boosts for 3-5% lifts. Netflix does this masterfully for new titles.

How does hybrid logging for ML data impact storage costs?

Dramatically, expect 50-70% reductions. Log smart: Essentials only, compress tabular formats. One client saved $10K/month.

Are there quick wins to optimize recommendation ranker training for mobile apps?

Prioritize closeup signals, they’re mobile-heavy. Sample touch events, retrain bi-weekly. Gains: 10-15% dwell time boosts.