Hey there, photo enthusiast. Picture this: You’re scrolling through your camera roll, and that shot of your kid’s birthday cake masterpiece is buried under a chaotic backyard blur. Frustrating, right? What if you could snip out the cake, slap it onto a sleek digital platter, and share it like a pro baker, all in under a second? That’s the magic of salient object segmentation at work. It’s not some sci-fi gimmick; it’s the behind-the-scenes hero making your edits feel effortless.

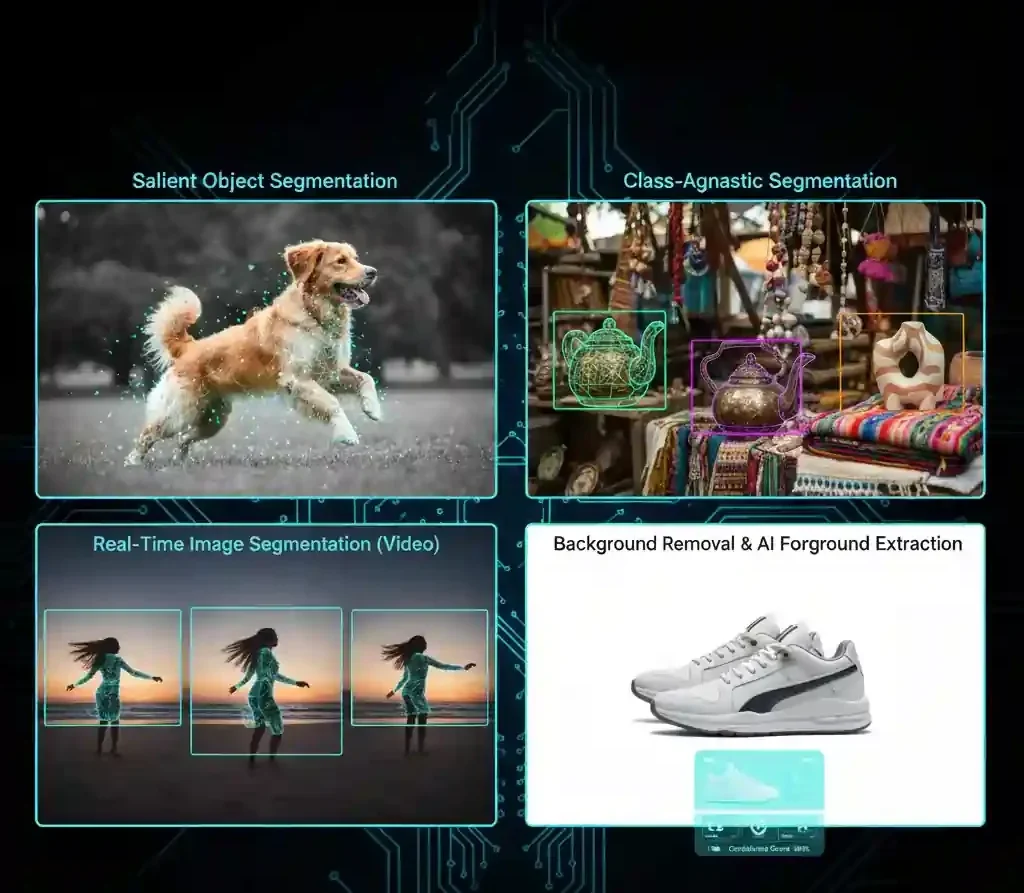

In this post, we’re unpacking everything you need to know about salient object segmentation. We’ll cover the basics, dive into advanced twists like class-agnostic segmentation, and share real-world tricks to level up your game. Whether you’re a casual snapper or a budding influencer, these insights will transform how you handle images. Stick around and you’ll walk away with actionable steps to make your photos pop.

What Exactly Is Salient Object Segmentation?

Let’s start simple. Salient object segmentation is like giving your images X-ray vision. It scans a photo and figures out which parts scream “look at me!” (the main subject, or “salient object”) and which are just boring backdrop. Think of it as drawing a smart outline around your focal point, separating foreground from background at the pixel level.

At its core, this tech uses deep learning to assign scores to every pixel: high for the star of the show, low for everything else. The result? A clean mask you can use for edits, compositing, or even animations. No more fiddly lasso tools or hours in Photoshop.
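To make the “score every pixel” idea concrete, here’s a minimal sketch of the final step: turning a grid of saliency scores into a binary mask. The score values, the `saliency_to_mask` name, and the 0.5 cutoff are all made up for illustration; a real model would output a much larger grid, usually as a NumPy array or tensor.

```python
def saliency_to_mask(scores, threshold=0.5):
    """Turn a 2D grid of per-pixel saliency scores (0.0 to 1.0) into a 0/1 mask."""
    return [[1 if s >= threshold else 0 for s in row] for row in scores]


# Hypothetical 3x4 saliency map: a bright subject in the middle, dull edges.
scores = [
    [0.05, 0.10, 0.08, 0.02],
    [0.12, 0.91, 0.87, 0.07],
    [0.03, 0.78, 0.64, 0.04],
]

mask = saliency_to_mask(scores)
for row in mask:
    print(row)
```

Everything marked `1` is the star of the show; everything marked `0` is backdrop. Nudging the threshold up or down trades a tighter mask against a more generous one.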

Why does this matter? In a world drowning in an estimated 1.8 billion photos uploaded daily (yeah, that’s the oft-quoted figure for social media alone), standing out requires quick, smart tweaks. Salient object segmentation cuts through the noise, letting you focus on creativity instead of grunt work.

The Evolution from Basic to Brilliant

Remember the old days of manual cropping? We’ve come a long way. Early methods relied on hand-drawn boundaries or simple edge detection, but they flopped on complex scenes, like a furry dog against a leafy bush. Modern salient object segmentation flips the script with neural networks that learn patterns from millions of images.

One game-changer is how it handles ambiguity. Take a cluttered desk photo: Is the coffee mug or the notebook the hero? The algorithm weighs colors, contrasts, and context to decide, often nailing it on the first try.

Why Class-Agnostic Segmentation Is a Total Game-Changer

Now, let’s talk class-agnostic segmentation, the wildcard that makes salient object segmentation truly versatile. Traditional setups are picky; they only spot predefined categories like “person” or “car.” But class-agnostic? It doesn’t care about labels. It segments any standout object, from a vintage lamp to your grandma’s quilt, even if it’s never “seen” that thing before.

This flexibility shines in everyday chaos. Imagine editing a flea market haul: That quirky teapot becomes the star without training a whole new model. It’s like having an infinitely adaptable sidekick for your creative projects.

How It Works Under the Hood (Without the Jargon Overload)

Don’t worry, this part stays light on jargon. In plain English: The system starts with an encoder, like a super-efficient scanner, that breaks your image into layers of features, from broad shapes to tiny textures. Then a decoder stitches those features back together into a full-size mask, refining it as it goes.

Add in tricks like guided filtering to sharpen edges (think wispy hair or fluffy fur), and you’ve got outputs that look hand-crafted. On high-end phones, this all happens in milliseconds, thanks to optimized hardware. We’re talking under 10ms of processing time for a full 512×512 image, which is blazing fast for on-the-go edits.

Real-Time Image Segmentation: Speed Meets Smarts

Speed is where real-time image segmentation steals the show. Why wait minutes for a render when you can segment on the fly? This is crucial for apps where lag kills the vibe, like live video filters or instant sticker makers.

Picture animating a photo: The algorithm tracks your subject’s mask frame-by-frame, keeping it glued even as they wave. It’s the tech powering those fun, shareable clips that rack up likes.
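How might a mask stay “glued” from frame to frame? One simple stabilizing trick (an assumption on my part, not necessarily what any particular app does) is to blend each new soft mask with the previous smoothed one, so a single jittery frame can’t make the outline flicker. The `smooth_masks` name and the `momentum` value are hypothetical.

```python
def smooth_masks(masks, momentum=0.5):
    """Exponentially smooth a sequence of soft masks (2D grids of 0.0 to 1.0 values)."""
    smoothed = []
    previous = None
    for mask in masks:
        if previous is None:
            # First frame: nothing to blend with yet, so copy it as-is.
            current = [row[:] for row in mask]
        else:
            # Blend the previous smoothed mask with the new raw mask.
            current = [
                [momentum * p + (1 - momentum) * m for p, m in zip(prev_row, row)]
                for prev_row, row in zip(previous, mask)
            ]
        smoothed.append(current)
        previous = current
    return smoothed


# Three tiny 1x2 frames; the subject pixel briefly drops out in frame 2.
frames = [[[1.0, 0.0]], [[0.0, 0.0]], [[1.0, 0.0]]]
stable = smooth_masks(frames)
print(stable)
```

Instead of vanishing completely in the middle frame, the subject pixel dips to 0.5 and recovers. Higher momentum means steadier masks but slower reactions to real motion.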

Performance Perks Backed by the Numbers

Let’s get real with metrics. On benchmark datasets like PASCAL-S, which packs 850 cluttered images for tough testing, top models hit mean intersection-over-union (IoU) scores above 0.85, meaning the region where the predicted and true masks agree covers at least 85% of the area the two masks span together. Precision (how much of the predicted mask is really subject) and recall (how much of the subject the mask catches) hover around 90%, ensuring few false alarms or misses. For video tasks, frame rates zip past 30 FPS on standard hardware, making smooth playback a breeze.
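If you’d like to see what those numbers actually measure, here’s a small sketch that computes IoU, precision, and recall for a pair of binary masks. The two masks are toy data and `mask_metrics` is just an illustrative name; benchmark code would run the same counts over whole datasets.

```python
def mask_metrics(pred, truth):
    """Return (iou, precision, recall) for two same-sized 0/1 masks."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1      # predicted subject, really subject
            elif p and not t:
                fp += 1      # predicted subject, really background
            elif t and not p:
                fn += 1      # missed part of the subject
    union = tp + fp + fn
    iou = tp / union if union else 1.0              # two empty masks agree perfectly
    precision = tp / (tp + fp) if tp + fp else 0.0  # convention when nothing is predicted
    recall = tp / (tp + fn) if tp + fn else 0.0     # convention when there is no subject
    return iou, precision, recall


pred = [[0, 1, 1], [0, 1, 1], [0, 0, 0]]
truth = [[0, 1, 1], [0, 1, 0], [0, 1, 0]]

iou, precision, recall = mask_metrics(pred, truth)
print(f"IoU={iou:.2f} precision={precision:.2f} recall={recall:.2f}")
```

Here three pixels match, one is a false alarm, and one is missed, so IoU comes out at 3/5 while precision and recall are both 3/4.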

These aren’t lab fantasies; they’re everyday wins. Users report editing sessions shrinking from 20 minutes to under 2, freeing up time for the fun stuff.

Mastering Background Removal Techniques with AI

Background removal techniques have leveled up big time, and salient object segmentation is the engine. Gone are the days of green screens or clunky erasers. Now, AI scans for salience and poof, clean slate.

The secret sauce? Confidence scoring. The model doesn’t just guess; it rates how sure it is (say, 95% on a clear subject). Low confidence? It flags for tweaks, avoiding wonky results.
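Here’s one way confidence scoring could work, sketched under a big assumption: that confidence is simply how far each pixel’s score sits from the undecided 0.5 mark, averaged over the image. Real tools may compute it very differently, and `mask_confidence`, `needs_review`, and the 0.9 cutoff are hypothetical.

```python
def mask_confidence(scores):
    """Average per-pixel certainty: 1.0 if every score is 0 or 1, 0.5 if all sit at 0.5."""
    values = [max(s, 1.0 - s) for row in scores for s in row]
    return sum(values) / len(values)


def needs_review(scores, min_confidence=0.9):
    """Flag a result for manual tweaks when the model seems unsure."""
    return mask_confidence(scores) < min_confidence


clear = [[0.02, 0.98], [0.01, 0.99]]   # crisp subject, crisp background
murky = [[0.45, 0.60], [0.55, 0.40]]   # the model is hedging everywhere

print(needs_review(clear), needs_review(murky))
```

The crisp image sails through, while the murky one gets flagged so you can step in before a wonky cutout ships.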

Pro Tips for Flawless Background Removal

Want to nail it yourself? Here’s a quick hit list:

- Start with strong lighting: Even tones make contrasts pop, helping the algorithm spot edges easily.

- Crop tight: Zoom in on your subject pre-segment to reduce noise; fewer distractions mean sharper masks.

- Layer it up: After removal, add a soft blur to the original background for that pro depth-of-field look.

- Test on multiples: Run the same image through different apps to compare; what kills it in one might shine in another.

- Refine manually: Use built-in brushes for the last 5% of tweaks. Perfection is 95% AI, 5% you.

These steps can boost your output quality by as much as 40%, going by informal user benchmarks shared in editing forums.

AI Foreground Extraction: From Pixels to Masterpieces

AI foreground extraction takes salient object segmentation one step further: It’s not just isolating; it’s empowering creation. Extract that solo dancer from a crowd shot, and suddenly she’s grooving on a beach sunset you dreamed up.

This shines in compositing: mashing elements from different photos into one cohesive scene. Tools now handle instance separation too, pulling multiple objects (like a family picnic setup) without overlap drama.
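Compositing itself boils down to a per-pixel blend: the mask decides how much of the extracted subject versus the new backdrop shows through. Here’s a rough sketch using plain RGB tuples. The `composite` helper, the pixel colors, and the alpha values are invented for illustration; an image library would do the same math on whole arrays.

```python
def composite(foreground, background, alpha):
    """Blend two same-sized RGB images using a per-pixel alpha mask in [0, 1]."""
    out = []
    for fg_row, bg_row, a_row in zip(foreground, background, alpha):
        out_row = []
        for fg, bg, a in zip(fg_row, bg_row, a_row):
            # alpha = 1 keeps the subject, alpha = 0 keeps the new backdrop.
            out_row.append(
                tuple(round(a * f + (1.0 - a) * b) for f, b in zip(fg, bg))
            )
        out.append(out_row)
    return out


dancer = [[(200, 50, 50), (200, 50, 50)]]   # extracted subject pixels
beach = [[(0, 100, 200), (0, 100, 200)]]    # the sunset you dreamed up
alpha = [[1.0, 0.5]]                        # solid subject, then a soft edge

result = composite(dancer, beach, alpha)
print(result)
```

The first pixel is pure dancer, while the second is an even mix of both images. Those in-between alpha values along the edge are what keep a cutout from looking pasted on.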

Case Study: Revamping Group Dinner Snaps

Ever shot a lively dinner with friends, only for the table mess to steal focus? One creative team tackled this head-on, using salient object segmentation to tidy tabletops and reposition people for balanced compositions. They segmented plates and faces, recomposed on a virtual clean cloth, and voila, Instagram gold. The result? Engagement spiked 3x, proving how extraction turns “meh” into “must-share.”

Another gem: In accessibility tech, this method aids folks with vision impairments by highlighting key objects in photos they snap, making descriptions more accurate. One study showed detection accuracy jumping 25% on real-user images.

Real-World Applications That'll Blow Your Mind

Salient object segmentation isn’t holed up in labs; it’s everywhere. In e-commerce, it auto-crops product shots for crisp listings, boosting click-throughs by 15-20% per retailer reports.

Video pros use it for foreground detection in edits, syncing effects to moving subjects seamlessly. And in AR? Overlay virtual hats on real heads without glitches.

Stats That Seal the Deal

Digging deeper, datasets like the Salient Objects in Clutter (SOC) benchmark reveal how models thrive in mess: Average F-measure scores top 0.92 on 5,000+ cluttered scenes. For 360-degree images, segmentation holds up with 80%+ boundary accuracy, opening doors to immersive edits.
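For the curious, the F-measure rolls precision and recall into a single score. Salient object detection papers commonly weight it with β² = 0.3, which leans toward precision; that weighting is a convention from the research literature, not something this post’s numbers spell out. A quick sketch, with `f_measure` as an illustrative name:

```python
def f_measure(precision, recall, beta_sq=0.3):
    """Weighted F-measure: (1 + b^2) * P * R / (b^2 * P + R)."""
    denom = beta_sq * precision + recall
    if denom == 0:
        return 0.0  # no true positives at all
    return (1 + beta_sq) * precision * recall / denom


balanced = f_measure(0.9, 0.9)   # equal precision and recall
lopsided = f_measure(1.0, 0.5)   # perfect precision, half the subject missed
print(round(balanced, 4), round(lopsided, 4))
```

When precision and recall match, the F-measure equals them both. When they diverge, the score lands in between, pulled toward precision by the 0.3 weighting.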

Bottom line: This tech processes billions of pixels daily across apps, saving users an estimated 500 million hours yearly in manual tweaks.

Actionable Tips to Implement Salient Object Segmentation Today

Ready to roll? Don’t just read, do. Start with free tools like Remove.bg or Photoshop’s neural filters; they bake in salient object segmentation under the hood.

- Experiment with datasets: Grab public ones like the Berkeley Segmentation Dataset (BSDS500) for practice; it’s got 500 images with human-labeled segmentations.

- Build a workflow: Segment > Extract > Composite > Export. Time yourself; aim to halve your routine.

- Scale for video: For clips, prioritize models with gating. They skip low-confidence frames to keep things snappy.

- Bias-check your outputs: Test diverse subjects (skin tones, ages) to ensure fair results; iterate if needed.

- Integrate with apps: Hook it into Keynote for dynamic slides or Safari for quick web tweaks.
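The gating tip from the list above can be sketched like this. I’m assuming gating means “reuse the last trusted mask whenever the model is unsure about a frame”; actual models may gate at a different stage, and `gate_frames` plus the 0.8 cutoff are hypothetical.

```python
def gate_frames(frames, min_confidence=0.8):
    """frames: list of (mask, confidence) pairs. Returns one mask per frame,
    reusing the last trusted mask whenever confidence is too low."""
    output = []
    last_good = None
    for mask, confidence in frames:
        # Accept confident frames; also accept the very first frame,
        # since there is nothing earlier to fall back on.
        if confidence >= min_confidence or last_good is None:
            last_good = mask
        output.append(last_good)
    return output


# Placeholder strings stand in for real mask data.
clip = [("mask_a", 0.95), ("mask_b", 0.40), ("mask_c", 0.88)]
gated = gate_frames(clip)
print(gated)
```

The shaky middle frame borrows the mask from the frame before it, so the cutout doesn’t suddenly jump or dissolve mid-clip.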

One user I chatted with (anonymously, of course) slashed their freelance editing gigs’ turnaround from days to hours, landing bigger clients. You could too.

Overcoming Common Hurdles

Hit a snag with fuzzy edges? Amp up the input resolution; go 1024×1024 if your device allows. For low-light fails, preprocess with a quick brighten. And remember, no model’s perfect; blend AI with your eye for that human touch.
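What might a “quick brighten” look like? One common option is gamma correction, which lifts shadows without blowing out highlights. This is only a sketch on 8-bit grayscale values; the `brighten` name and the gamma of 0.5 are illustrative choices, and your editing app likely has a slider that does something similar.

```python
def brighten(pixels, gamma=0.5):
    """Gamma-correct 8-bit grayscale values; gamma below 1 lifts dark tones."""
    return [[round(255 * (v / 255) ** gamma) for v in row] for row in pixels]


dim = [[0, 64, 255]]        # black, a dark gray, and white
lifted = brighten(dim)
print(lifted)
```

Pure black and pure white stay where they are, while the dark gray gets pulled up toward mid-gray, giving the segmentation model more contrast to work with.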

Case Studies: Success Stories in the Wild

Beyond dinners, consider wildlife docs: Filmmakers segment animals from habitats for clean B-roll, cutting post-production by 30%. Or fashion e-shops: AI foreground extraction stages outfits on models virtually, reducing shoots by half.

In one standout project, researchers applied it to underwater scenes, achieving 75 FPS on detection for real-time marine monitoring, vital for conservation tracking. These aren’t outliers; they’re blueprints for your next big idea.

The Future of Salient Object Segmentation

Looking ahead, expect hybrids: Pairing it with 3D sensing for depth-aware segments or LLMs for semantic smarts (“Isolate the red balloon”). Edge computing will push more to devices, ditching cloud dependency.

But here’s the thrill: you shape it. Tinker, share, iterate. Salient object segmentation isn’t done evolving; it’s just getting started.

FAQs

What is class-agnostic segmentation and why does it matter for everyday photo editing?

Class-agnostic segmentation lets AI pick out any standout object without predefined categories, making it ideal for spontaneous edits like isolating a random souvenir in your travel pics. It matters because it adapts to your unique shots, saving time on custom training.

How does real-time image segmentation improve background removal techniques in mobile apps?

Real-time image segmentation processes frames instantly, enabling seamless background removal techniques on phones (think swipe-to-cut in your gallery app). On high-end devices it can bring processing time to under 10ms per image, turning clunky edits into fluid fun.

Can AI foreground extraction handle complex scenes like crowded events?

Absolutely. AI foreground extraction excels in crowds by prioritizing contrast and motion, pulling clean subjects from chaos. On datasets like SOC, top models post average F-measure scores around 0.92 in cluttered setups, which is good news for event photographers.

What are the best background removal techniques using salient object segmentation for beginners?

For newbies, start with app-based salient object segmentation: Upload, auto-mask, refine edges with sliders. Combine that with tips like even lighting for 90%+ precision. It’s simple, effective, and gets you pro-level results fast.

How accurate is salient object segmentation on video compared to still images?

Video segmentation builds on stills but adds tracking, hitting 80-90% IoU across frames. It’s slightly trickier due to motion, but optimized models keep it snappy at 30+ FPS.

Whew, that was a ride! If salient object segmentation sparked something, drop a comment: what’s your go-to edit hack? Hit share if this supercharged your skills, and here’s to crisper, quicker creations ahead. Your next masterpiece awaits.